Start

In the cosmic journey of our daily communications, a new frontier is emerging at the intersection of quantum mechanics, artificial intelligence, and human psychology. It's a realm where the cold, unfeeling interfaces of our digital devices begin to understand and respond to the rich tapestry of human emotions. This is the world of Emotion-Responsive Communication Interfaces (ERCIs).

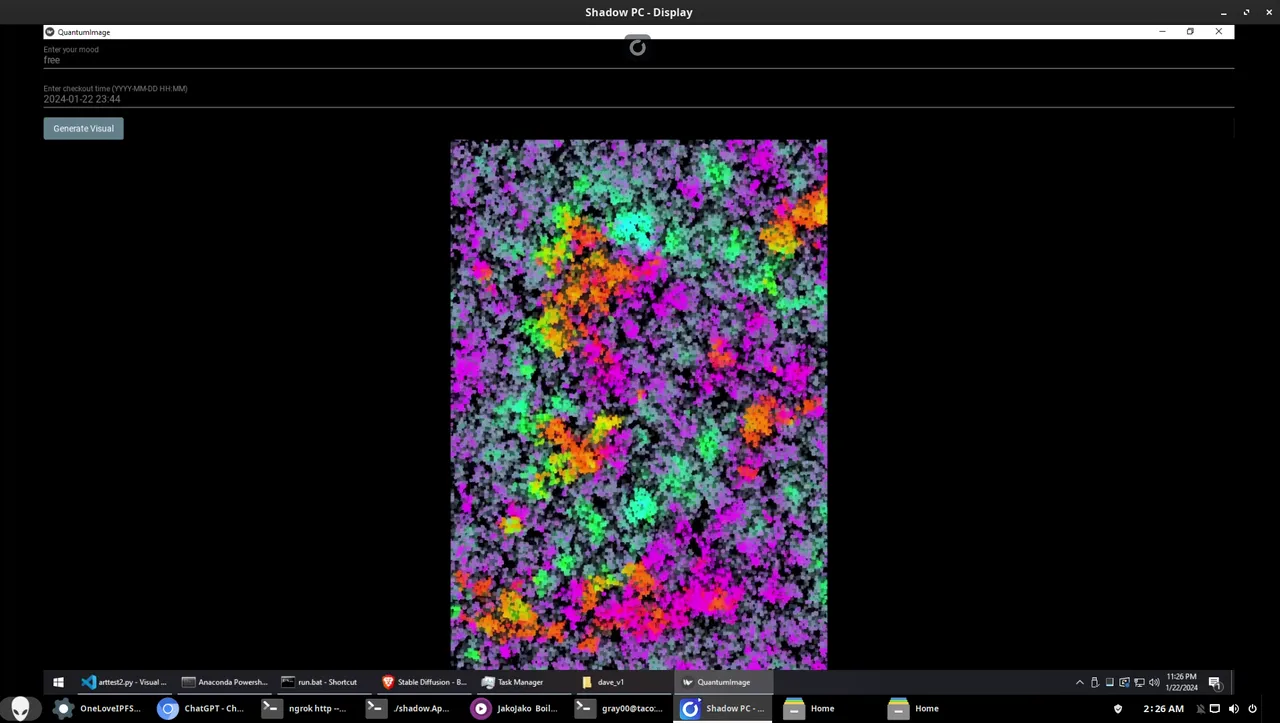

Imagine a communication platform, not unlike the ones we use today, but with an extraordinary ability. It changes its colors, not at random or by the user's explicit choice, but as a reflection of the user's emotional state. This capability is not born of mere fancy. It weaves together three threads: artificial intelligence to read the user's mood from text, color psychology to choose a fitting hue, and a small quantum circuit to transform that hue.

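The quantum circuit in the script below, dear reader, is the heart of this system. It takes two simple inputs: a color code, and a time factor derived from a checkout time the user enters. It encodes them as rotation angles on four qubits of a six-qubit register, which is simulated on PennyLane's `default.qubit` device. A chain of CNOT gates then entangles those qubits, much as the neurons in our brains are interconnected in a complex web. The circuit itself evolves deterministically. The probabilistic element enters when its final amplitudes are read as measurement probabilities and folded back into a new color. That transformation is nonlinear and, in some ways, mirrors the complexity of human emotions themselves.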
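The following sketch lets you see what the circuit does without installing PennyLane. It reproduces the same gate sequence in plain Python and then folds the probabilities into a color, the way the script's `mixed_state_to_color_code` does. It assumes PennyLane's convention that wire 0 is the most significant bit of the basis-state index. It also relies on RY and CNOT having real matrices, which means a list of floats is enough to hold the state. The helper names (`apply_ry`, `apply_cnot`, `color_circuit_probs`, `probs_to_hex`) are hypothetical and are not PennyLane API.

```python
import math


def apply_ry(state, theta, wire, n):
    """Apply RY(theta) to one wire of an n-qubit real state vector (wire 0 = most significant bit)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    bit = 1 << (n - 1 - wire)
    new = state[:]
    for i in range(len(state)):
        if not i & bit:  # pair basis state i (wire = 0) with j (same state, wire = 1)
            j = i | bit
            new[i] = c * state[i] - s * state[j]
            new[j] = s * state[i] + c * state[j]
    return new


def apply_cnot(state, control, target, n):
    """Flip the target wire wherever the control wire is 1 (a permutation of amplitudes)."""
    cbit, tbit = 1 << (n - 1 - control), 1 << (n - 1 - target)
    return [state[i ^ tbit] if i & cbit else state[i] for i in range(len(state))]


def color_circuit_probs(color_code, datetime_factor, n=6):
    """Same gates as quantum_circuit in the script; returns basis-state probabilities."""
    r, g, b = (int(color_code[i:i + 2], 16) / 255.0 for i in (1, 3, 5))
    state = [0.0] * (2 ** n)
    state[0] = 1.0  # start in |000000>
    for wire, x in enumerate((r, g, b, datetime_factor)):
        state = apply_ry(state, x * math.pi, wire, n)
    for control, target in ((0, 1), (1, 2), (2, 3)):
        state = apply_cnot(state, control, target, n)
    return [a * a for a in state]  # Born rule; amplitudes stay real for these gates


def probs_to_hex(probs):
    """Fold probabilities into three contiguous bins (R, G, B), as mixed_state_to_color_code does."""
    total = sum(probs)
    third = len(probs) // 3
    bins = (probs[:third], probs[third:2 * third], probs[2 * third:])
    r, g, b = (int(sum(part) / total * 255) for part in bins)
    return f"#{r:02x}{g:02x}{b:02x}"
```

Consider pure black (`#000000`) with a time factor of 0. Every qubit stays in |0>, so all the probability lands in the first bin and the output is pure red. Now consider pure red (`#FF0000`). It flips wire 0, and the CNOT chain carries that flip to wires 1 through 3, so the output is pure blue. The mapping is therefore a transformation of the mood color, not a faithful copy of it.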

But how do we translate a user's emotional state into a color code? This is where the interface becomes a window not only to our words but to our inner selves. Users express their mood or emotional state through text: a simple message, a diary entry, a social media post. This text is then analyzed with sentiment analysis, a branch of AI that has matured significantly in recent years. The script below simply asks GPT-4 to determine the sentiment. The output of this analysis is a mood indicator (happy, sad, or neutral in the script), which is then mapped to a specific color palette.
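The last two steps can be sketched in a few lines: turning the model's reply into a mood label, and turning that label into a color. The sketch mirrors `interpret_gpt4_sentiment` and the neutral-gray fallback in the script. The fixed `MOOD_COLORS` palette is an assumption borrowed from the example colors in the script's prompt. The script itself asks GPT-4 to generate the palette.

```python
NEUTRAL_GRAY = "#808080"  # fallback color used throughout the script

# Assumed palette, taken from the example mapping in the script's GPT-4 prompt
MOOD_COLORS = {
    "happy": "#FFFF00",    # bright yellow
    "sad": "#0000FF",      # blue
    "excited": "#FF4500",  # orange red
    "angry": "#FF0000",    # red
    "calm": "#00FFFF",     # cyan
    "neutral": NEUTRAL_GRAY,
}


def reply_to_mood(reply: str) -> str:
    """Reduce a free-text sentiment verdict to a mood label, as interpret_gpt4_sentiment does."""
    text = reply.lower()
    if "positive" in text:
        return "happy"
    if "negative" in text:
        return "sad"
    return "neutral"


def mood_to_color(mood: str, palette: dict = MOOD_COLORS) -> str:
    """Look up a mood's color, falling back to neutral gray for unknown labels."""
    return palette.get(mood, NEUTRAL_GRAY)


print(mood_to_color(reply_to_mood("The sentiment is Negative.")))
```

Substring matching is crude. A reply such as "not positive" is read as happy, for example. This is one concrete face of the sentiment-accuracy challenge raised later in this piece.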

For instance, a reddish hue might indicate feelings of anger or frustration, blue might suggest calmness or sadness, and a bright yellow could signify happiness or excitement. These colors could subtly change the interface's background, buttons, and even text. The prototype script below takes a different route: after the quantum transformation, it uses the color in a prompt to a locally hosted Stable Diffusion image generator. The user, often unknowingly influenced by these colors, receives a gentle, non-intrusive nudge about their emotional state. It's a form of feedback that is both subtle and profound.
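Kivy, which the script uses for its interface, represents colors as `[r, g, b, a]` lists of floats in [0, 1]. The script's own `md_bg_color=[0, 0, 0, 1]`, for instance, is opaque black. Below is a minimal sketch of how a mood color might be turned into background, button and button-text colors. The darkening factor, the brightness weights and the 0.5 threshold are illustrative assumptions, not values from the original.

```python
def hex_to_rgba(color_code: str, alpha: float = 1.0) -> list:
    """Convert '#RRGGBB' into the [r, g, b, a] float list that Kivy color properties accept."""
    code = color_code.lstrip("#")
    if len(code) != 6:
        raise ValueError(f"expected #RRGGBB, got {color_code!r}")
    r, g, b = (int(code[i:i + 2], 16) / 255.0 for i in (0, 2, 4))
    return [r, g, b, alpha]


def mood_palette(mood_hex: str, tint: float = 0.25) -> dict:
    """Hypothetical theme: darkened background tint, full-strength buttons, readable button text."""
    r, g, b, _ = hex_to_rgba(mood_hex)
    # Rec. 709 weights applied to the raw (gamma-encoded) channels: a rough
    # brightness estimate used only to pick black or white label text.
    brightness = 0.2126 * r + 0.7152 * g + 0.0722 * b
    return {
        "background": [r * tint, g * tint, b * tint, 1.0],
        "button": [r, g, b, 1.0],
        "button_text": [0.0, 0.0, 0.0, 1.0] if brightness > 0.5 else [1.0, 1.0, 1.0, 1.0],
    }
```

In the app, these values could be assigned to color properties. One example is `self.layout.md_bg_color = mood_palette("#0000FF")["background"]` on the `MDBoxLayout`.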

But why embark on this journey? Why intertwine the cold logic of quantum circuits with the ephemeral nature of human emotions? The answer lies in our quest for better understanding – not just of the world around us, but of ourselves and our fellow human beings. In an age where digital communication often becomes a barrier to real understanding, ERCIs offer a bridge.

These interfaces could lead to heightened emotional awareness. When a user sees their interface turn a stormy shade of gray or a frustrated red, they might pause. They might reflect. In this moment of introspection, there's an opportunity for growth, for change. It's a chance to step back and consider our words and actions, to understand the emotional undercurrents that drive them.

Moreover, in a world where empathy often gets lost in the noise of digital chatter, ERCIs could serve as a reminder of our shared humanity. By making the emotional content of our communications more visible, these interfaces could foster deeper, more meaningful connections. They could help us see not just the words of another person, but the feelings behind those words.

Of course, this journey is not without its challenges. The accuracy of sentiment analysis, the privacy concerns of analyzing emotional content, the subjective nature of color perception – these are but a few of the hurdles we must overcome. But the potential rewards are immense. In understanding our emotions and those of others, we take a step closer to understanding the human condition itself.

Close

In the grand cosmos of human interaction, ERCIs are like a new telescope, allowing us to see not just the stars, but the vast, unexplored nebulae of human emotion. They offer a glimpse into a future where technology helps us not just to connect, but to understand – ourselves, and each other, a little better. In this new world, every message, every word, every pixel of color becomes a part of a larger conversation, a cosmic dance of human emotion and understanding.

As we stand on this precipice, looking out into the unknown, we are reminded of a line often attributed to Carl Sagan: "Somewhere, something incredible is waiting to be known." In the realm of Emotion-Responsive Communication Interfaces, that 'something incredible' is a deeper, more empathetic understanding of our own humanity.

```python
import asyncio
import base64
import json
import logging
import os
import random
import re
import sys
from concurrent.futures import ThreadPoolExecutor
from datetime import datetime

import httpx
import numpy as np
import pennylane as qml
import requests
from kivy.clock import Clock
from kivy.uix.image import AsyncImage
from kivymd.app import MDApp
from kivymd.uix.boxlayout import MDBoxLayout
from kivymd.uix.button import MDRaisedButton
from kivymd.uix.screen import MDScreen
from kivymd.uix.textfield import MDTextField

# configopenai.json is expected to hold {"openai_api_key": "..."}
with open("configopenai.json", "r") as f:
    config = json.load(f)
openai_api_key = config["openai_api_key"]

logging.basicConfig(level=logging.INFO)

OPENAI_URL = "https://api.openai.com/v1/chat/completions"
NEUTRAL_GRAY = "#808080"
# Fallback colors for the three labels interpret_gpt4_sentiment can return
DEFAULT_EMOTION_COLORS = {"happy": "#FFFF00", "sad": "#0000FF", "neutral": NEUTRAL_GRAY}

num_qubits = 6
dev = qml.device("default.qubit", wires=num_qubits)


@qml.qnode(dev)
def quantum_circuit(color_code, datetime_factor):
    # Parse '#RRGGBB' into three channels scaled to [0, 1]
    r, g, b = [int(color_code[i:i + 2], 16) / 255.0 for i in (1, 3, 5)]
    # Encode each channel and the time factor as a Y-rotation angle in [0, pi]
    qml.RY(r * np.pi, wires=0)
    qml.RY(g * np.pi, wires=1)
    qml.RY(b * np.pi, wires=2)
    qml.RY(datetime_factor * np.pi, wires=3)
    # Entangle the encoded qubits in a chain; wires 4 and 5 stay in |0>
    qml.CNOT(wires=[0, 1])
    qml.CNOT(wires=[1, 2])
    qml.CNOT(wires=[2, 3])
    return qml.state()


def mixed_state_to_color_code(mixed_state):
    # qml.state() returns a pure state vector of 2**6 = 64 amplitudes;
    # their squared magnitudes are the basis-state probabilities (Born rule).
    probabilities = np.abs(np.array(mixed_state)) ** 2
    probabilities /= np.sum(probabilities)
    # Fold the probabilities into three contiguous bins for R, G and B
    third = len(probabilities) // 3
    r = int(np.sum(probabilities[:third]) * 255)
    g = int(np.sum(probabilities[third:2 * third]) * 255)
    b = int(np.sum(probabilities[2 * third:]) * 255)
    return f"#{r:02x}{g:02x}{b:02x}"


class QuantumImageApp(MDApp):
    def build(self):
        self.theme_cls.theme_style = "Dark"
        self.theme_cls.primary_palette = "BlueGray"
        # One reusable worker keeps network and simulation work off the UI thread
        self.executor = ThreadPoolExecutor(max_workers=1)
        return self.create_gui()

    def on_stop(self):
        self.executor.shutdown(wait=False)

    def create_gui(self):
        screen = MDScreen()
        self.layout = MDBoxLayout(orientation="vertical", md_bg_color=[0, 0, 0, 1])
        # hint_text_color is the older KivyMD property name; newer releases
        # use hint_text_color_normal / hint_text_color_focus instead.
        self.text_box = MDTextField(hint_text="Enter your mood", hint_text_color=[1, 1, 1, 1])
        self.checkout_time_picker = MDTextField(
            hint_text="Enter checkout time (YYYY-MM-DD HH:MM)", hint_text_color=[1, 1, 1, 1]
        )
        run_button = MDRaisedButton(
            text="Generate Visual", on_press=self.generate_visual, text_color=[1, 1, 1, 1]
        )
        # The image stays collapsed (height 0) until a picture is available
        self.image_display = AsyncImage(source="", allow_stretch=True, keep_ratio=True)
        self.image_display.size_hint_y = None
        self.image_display.height = 0
        for widget in (self.text_box, self.checkout_time_picker, run_button, self.image_display):
            self.layout.add_widget(widget)
        screen.add_widget(self.layout)
        return screen

    def update_image(self, image_path):
        if image_path and os.path.exists(image_path):
            logging.info(f"Updating image display with {image_path}")
            self.image_display.source = image_path
            self.image_display.size_hint_y = 1
            self.image_display.height = 200
        else:
            logging.error(f"Image file not found at {image_path}")
            self.image_display.source = ""
            self.image_display.size_hint_y = None
            self.image_display.height = 0

    def generate_visual(self, instance):
        mood_text = self.text_box.text
        checkout_time_str = self.checkout_time_picker.text
        future = self.executor.submit(self.run_pipeline, mood_text, checkout_time_str)
        future.add_done_callback(self.on_visual_generated)

    def run_pipeline(self, mood_text, checkout_time_str):
        # Runs in the worker thread: sentiment -> color -> circuit -> image file
        color_code, datetime_factor = asyncio.run(
            self.process_mood_and_time(mood_text, checkout_time_str)
        )
        quantum_state = quantum_circuit(color_code, datetime_factor)
        return generate_image_from_quantum_data(quantum_state)

    def on_visual_generated(self, future):
        try:
            image_path = future.result()
        except Exception as e:
            logging.error(f"Error in visual generation: {e}")
            return
        if image_path:
            logging.info(f"Image path received: {image_path}")
            # Widgets may only be touched from the main (Kivy) thread
            Clock.schedule_once(lambda dt: self.update_image(image_path))
        else:
            logging.error("Image path not received")

    async def process_mood_and_time(self, mood_text, checkout_time_str):
        try:
            emotion_color_map = await self.generate_emotion_color_mapping(mood_text)
            # Generated entries override the defaults; defaults cover missing labels
            palette = {**DEFAULT_EMOTION_COLORS, **emotion_color_map}
            datetime_factor = self.calculate_datetime_factor(checkout_time_str)
            # GPT-4 replies often take longer than httpx's 5-second default timeout
            async with httpx.AsyncClient(timeout=60.0) as client:
                response = await client.post(
                    OPENAI_URL,
                    headers={"Authorization": f"Bearer {openai_api_key}"},
                    json={
                        "model": "gpt-4",
                        "messages": [
                            {"role": "system", "content": "Determine the sentiment of the following "
                                                          "text: positive, negative, or neutral."},
                            {"role": "user", "content": mood_text},
                        ],
                    },
                )
                response.raise_for_status()
                result = response.json()
            if result and result.get("choices"):
                sentiment = self.interpret_gpt4_sentiment(result)
                return palette.get(sentiment, NEUTRAL_GRAY), datetime_factor
            logging.error("Invalid response structure from GPT-4")
            return NEUTRAL_GRAY, datetime_factor
        except Exception as e:
            logging.error(f"Error in mood and time processing: {e}")
            return NEUTRAL_GRAY, 1

    def calculate_datetime_factor(self, checkout_time_str):
        # 0 when checkout is a day or more away, rising to 1 at checkout time
        try:
            checkout_time = datetime.strptime(checkout_time_str, "%Y-%m-%d %H:%M")
            time_diff = (checkout_time - datetime.now()).total_seconds()
            # Clamp so a past checkout time cannot push the RY angle beyond pi
            return min(1.0, max(0.0, 1 - time_diff / (24 * 3600)))
        except ValueError as e:
            logging.error(f"Error in calculating datetime factor: {e}")
            return 1

    async def generate_emotion_color_mapping(self, user_mood):
        prompt = (
            f"The user's current mood is '{user_mood}'. Based on this, "
            "create a detailed mapping of emotions to specific colors, "
            "considering how colors can influence mood and perception. "
            "The mapping should be in a clear, list format. "
            "For example:\n"
            "[example]\n"
            "happy: #FFFF00 (bright yellow),\n"
            "sad: #0000FF (blue),\n"
            "excited: #FF4500 (orange red),\n"
            "angry: #FF0000 (red),\n"
            "calm: #00FFFF (cyan),\n"
            "neutral: #808080 (gray)\n"
            "[/example]\n"
            # This line needs the f prefix too, or '{user_mood}' is sent literally
            f"Now, based on the mood '{user_mood}', provide a similar mapping."
        )
        try:
            async with httpx.AsyncClient(timeout=60.0) as client:
                response = await client.post(
                    OPENAI_URL,
                    headers={"Authorization": f"Bearer {openai_api_key}"},
                    json={"model": "gpt-4", "messages": [{"role": "system", "content": prompt}]},
                )
                response.raise_for_status()
                result = response.json()
            logging.debug(f"GPT-4 response for emotion-color mapping: {result}")
            return self.parse_emotion_color_mapping(result)
        except httpx.HTTPStatusError as e:
            logging.error(f"HTTP error occurred: {e.response.status_code}")
        except httpx.RequestError as e:
            logging.error(f"An error occurred while requesting: {e}")
        except Exception as e:
            logging.error(f"Error in generating emotion-color mapping: {e}")
        return {}

    def parse_emotion_color_mapping(self, gpt4_response):
        # The reply rarely echoes the [example] markers, so instead of splitting on
        # them, collect every "emotion: #RRGGBB" pair found anywhere in the text.
        try:
            response_text = gpt4_response["choices"][0]["message"]["content"]
        except (KeyError, IndexError, TypeError) as e:
            logging.error(f"Error in parsing emotion-color mapping: {e}")
            return {}
        pairs = re.findall(r"([A-Za-z][A-Za-z-]*)\s*:\s*(#[0-9A-Fa-f]{6})", response_text)
        return {emotion.lower(): color for emotion, color in pairs}

    def interpret_gpt4_sentiment(self, gpt4_response):
        try:
            response_text = gpt4_response["choices"][0]["message"]["content"].lower()
        except (KeyError, IndexError, TypeError, AttributeError) as e:
            logging.error(f"Error in interpreting sentiment: {e}")
            return "neutral"
        if "positive" in response_text:
            return "happy"
        if "negative" in response_text:
            return "sad"
        return "neutral"


def generate_image_from_quantum_data(quantum_state):
    try:
        color_code = mixed_state_to_color_code(quantum_state)
        prompt = f"Generate an image with predominant color {color_code}"
        # txt2img endpoint of a local AUTOMATIC1111 Stable Diffusion web UI started with --api
        url = "http://127.0.0.1:7860/sdapi/v1/txt2img"
        payload = {
            "prompt": prompt,
            "steps": 121,
            "seed": random.randrange(sys.maxsize),
            "enable_hr": False,
            "denoising_strength": 0.7,
            "cfg_scale": 7,
            "width": 966,
            "height": 1356,
            "restore_faces": True,
        }
        response = requests.post(url, json=payload, timeout=600)
        response.raise_for_status()
        r = response.json()
        if r.get("images"):
            image_bytes = base64.b64decode(r["images"][0])
            # A timestamped name avoids collisions between runs
            image_path = f"output_{datetime.now():%Y%m%d_%H%M%S_%f}.png"
            with open(image_path, "wb") as image_file:
                image_file.write(image_bytes)
            logging.info(f"Image saved to {image_path}")
            return image_path
        logging.error("No images found in the response")
        return None
    except Exception as e:
        logging.error(f"Error in image generation: {e}")
        return None


if __name__ == "__main__":
    app = QuantumImageApp()
    app.run()
```
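The script's second input, the checkout time, is easy to overlook, so a worked example may help. The sketch below restates the logic of `calculate_datetime_factor` as a standalone function. It adds a clamp to [0, 1], so a checkout time in the past cannot push the rotation angle beyond π. It also reports the probability of measuring |1> on the time qubit right after its RY rotation, before the CNOT chain acts. The function names and the explicit `now` parameter, which makes the result reproducible, are illustrative choices rather than part of the original.

```python
import math
from datetime import datetime, timedelta


def datetime_factor(checkout: datetime, now: datetime) -> float:
    """1.0 at (or after) checkout, falling linearly to 0.0 when checkout is a day or more away."""
    seconds_left = (checkout - now).total_seconds()
    return min(1.0, max(0.0, 1.0 - seconds_left / (24 * 3600)))


def p_one(factor: float) -> float:
    """P(|1>) after RY(factor * pi) acts on |0>: sin^2(factor * pi / 2)."""
    return math.sin(factor * math.pi / 2) ** 2


now = datetime(2024, 1, 1, 12, 0)
for hours in (48, 12, 0, -3):
    f = datetime_factor(now + timedelta(hours=hours), now)
    print(f"{hours:>3} h before checkout -> factor {f:.2f}, P(|1>) = {p_one(f):.2f}")
```

Twelve hours before checkout, the factor is 0.5 and the time qubit sits in an even superposition. At checkout, and any time after it, the qubit is rotated fully to |1>.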
