The classic trolley problem goes like this: You see a runaway trolley speeding down the tracks, about to hit and kill five people. You have access to a lever that could switch the trolley to a different track, where a different person would meet an untimely demise. Should you pull the lever and end one life to spare five?

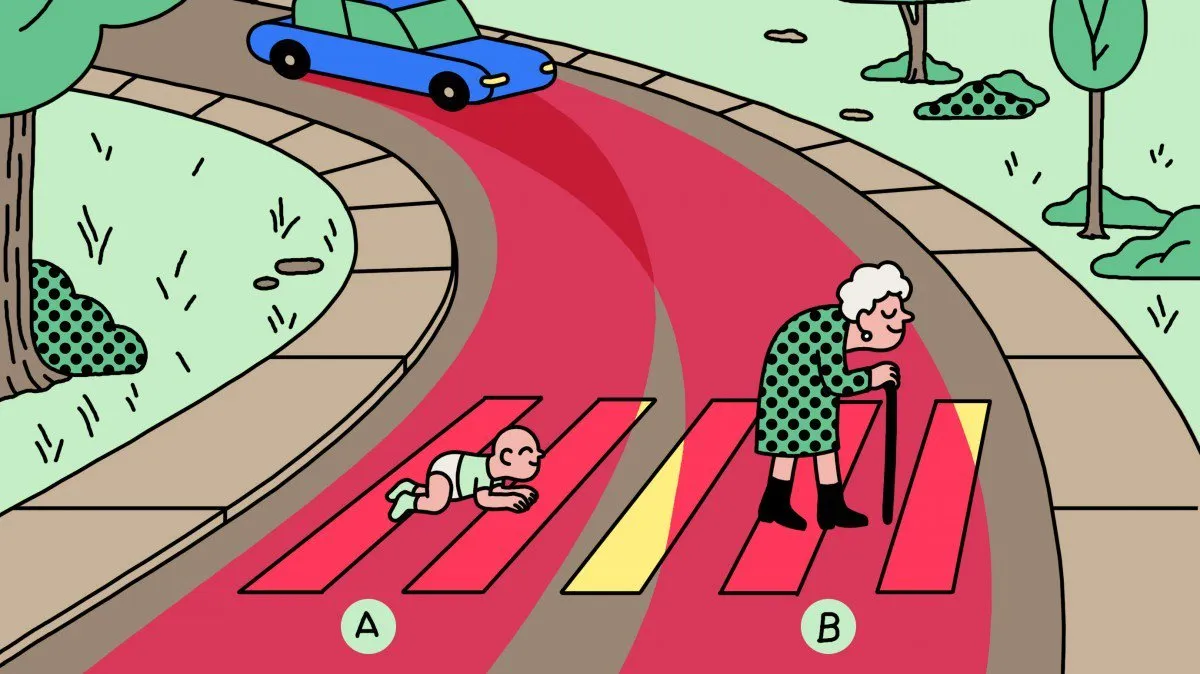

The Moral Machine used that idea to test nine comparisons shown to polarize people. Should a self-driving car prioritize humans over pets, passengers over pedestrians, more lives over fewer, women over men, young over old, fit over sickly, higher social status over lower, or law-abiders over law-benders? And finally, should the car swerve (take action) or stay on course (inaction)?

Rather than pose one-to-one comparisons, however, the experiment presented participants with various combinations, such as whether a self-driving car should continue straight ahead and kill three elderly pedestrians or swerve into a barricade and kill three young passengers.

The researchers found that countries’ preferences differ widely, but they also correlate highly with culture and economics.
