Docker Machine, Compose, and Swarm

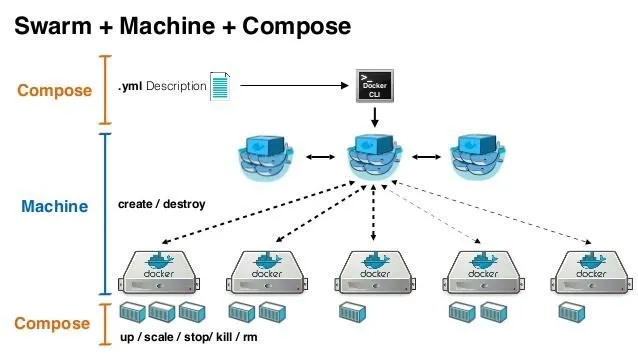

I'm in the process of setting up and testing a Docker Swarm using Docker Compose 3.0. I'm testing with this stack because it's a multi-container setup I'm familiar with.

The stack is written for docker-compose file version 2, so it has to be adjusted for version 3. It's a good sample case to work through, since the situation is similar for most of our stacks.

I had no problem deploying with docker swarm commands directly, after creating an overlay network and adding a node to it. The next step is moving the network definition into the docker-compose.yml file. That's where I had trouble, because the compose file failed to see the network. I think I've found the solution to that here.

I haven't had a chance to test yet because I'm also starting to use docker-machine to create the hosts (nodes) rather than manually adding them to my swarms. I'm thinking of creating a server that will host docker-machine and a docker manager, with Digital Ocean and AWS credentials set up.

That way people with an authorized ssh key can access the server for management purposes.

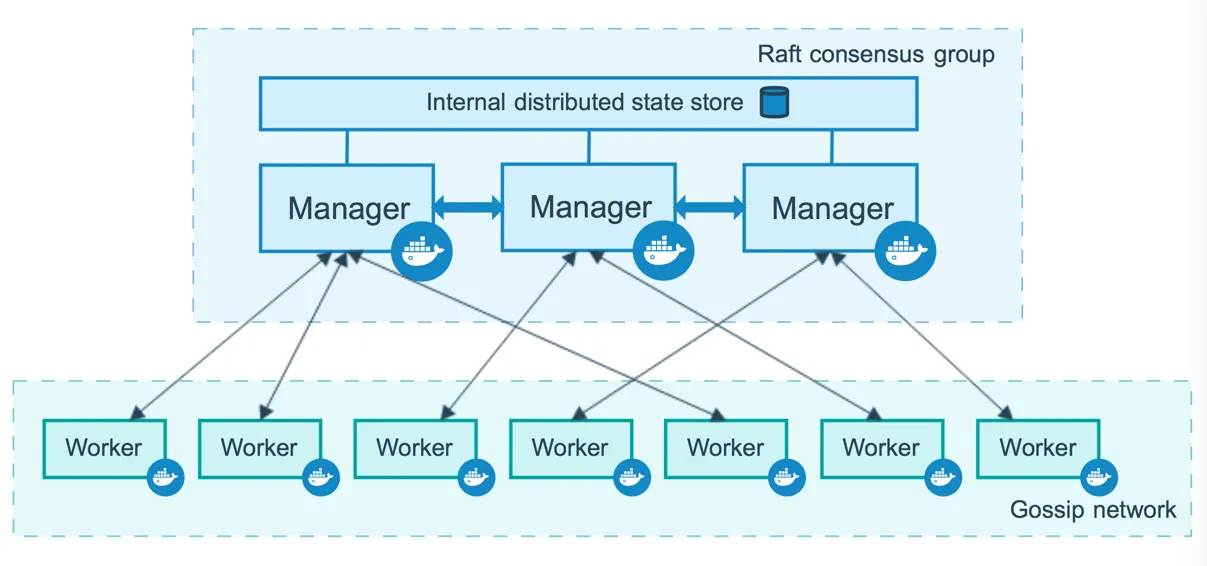

We'll probably want multiple docker manager nodes for each stack, but I'm thinking we only need one instance of docker-machine to manage everything. Having multiple docker-managers is considered a high-availability setup, HA for short.
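To get a feel for how many manager nodes "multiple" should mean, here is a small sketch of the majority arithmetic. It assumes what I understand from the Docker docs: swarm managers use Raft consensus, so more than half of them must stay reachable for the swarm to keep accepting changes. The function names are my own, just for illustration.

```python
def quorum(managers: int) -> int:
    """Smallest number of managers that forms a majority."""
    return managers // 2 + 1


def fault_tolerance(managers: int) -> int:
    """How many managers can be lost while a majority remains."""
    return (managers - 1) // 2


# Compare a few manager counts side by side.
for n in (1, 2, 3, 4, 5, 7):
    print(f"{n} managers: quorum={quorum(n)}, can lose {fault_tolerance(n)}")
```

Going from 3 to 4 managers doesn't buy any extra tolerance under this arithmetic, which is why odd numbers are the usual recommendation.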

Notes

The rest of this journal entry is basically me dumping my notes from today.

DevOps - Deploying to Docker Swarm with Compose 3.0

- Docker Swarm Visualizer - An open-source tool for viewing your swarm.

High Availability (HA)

Volumes

I have to look more into attaching volumes. I know in the past I've run EC2 instances without setting up a volume.

When I used up all the storage, it automatically allocated more at a premium price. By attaching my own volume purchased ahead of time, the cost came to less than 10% of that. I can't remember the details of why right now. It might have been that the storage I attached was HDD instead of SSD.

Though I don't plan to store any large files or content on the docker images themselves.

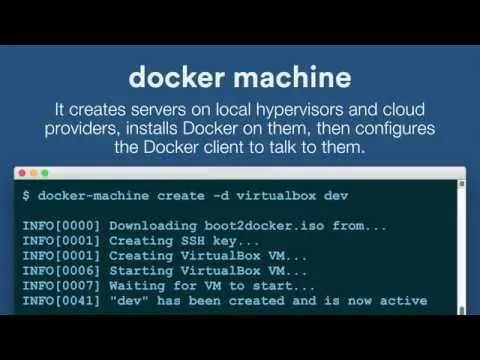

Docker Machine

AWS VPC Required

This section is only applicable if you're using docker-machine to create EC2 instances.

You need a VPC set up on Amazon to use Docker Machine with EC2. Based on an error message I thought this was my problem, but the actual problem was in the IAM configuration for the AWS credentials I was using.

Make sure the credentials have authorization for your VPC and EC2. Not having a default VPC probably isn't your problem unless you explicitly deleted the one Amazon creates for you by default.

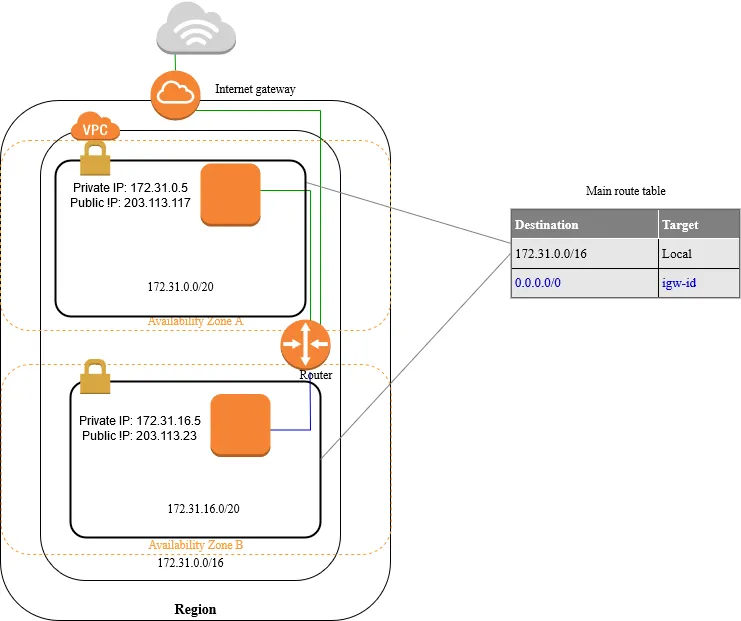

VPC = Virtual Private Cloud

From the AWS documentation: When we create a default VPC, we do the following to set it up for you:

- Create a VPC with a size /16 IPv4 CIDR block (172.31.0.0/16). This provides up to 65,536 private IPv4 addresses.

- Create a size /20 default subnet in each Availability Zone. This provides up to 4,096 addresses per subnet, a few of which are reserved for our use.

- Create an internet gateway and connect it to your default VPC.

- Create a main route table for your default VPC with a rule that sends all IPv4 traffic destined for the internet to the internet gateway.

- Create a default security group and associate it with your default VPC.

- Create a default network access control list (ACL) and associate it with your default VPC.

- Associate the default DHCP options set for your AWS account with your default VPC.
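The address counts in that list can be checked with Python's standard `ipaddress` module. This is only a sanity check of the arithmetic, not anything AWS-specific. The figure of 5 reserved addresses per subnet is my assumption from memory of the AWS docs; the list above only says "a few".

```python
import ipaddress

# The default VPC block from the list above.
vpc = ipaddress.ip_network("172.31.0.0/16")
print(vpc.num_addresses)  # total addresses in the /16

# Carve the /16 into /20 subnets, the default subnet size.
subnets = list(vpc.subnets(new_prefix=20))
print(len(subnets))  # how many /20 subnets fit in the /16
print(subnets[0], subnets[0].num_addresses)

# Assumption: AWS reserves 5 addresses in each subnet.
AWS_RESERVED_PER_SUBNET = 5
usable = subnets[0].num_addresses - AWS_RESERVED_PER_SUBNET
print(usable)
```

So a /16 holds at most sixteen /20 subnets, which is more than enough for one default subnet per Availability Zone.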

VPC Diagram