Sifting through the bullshit so that you don't have to...

Today, information has been circulating that China has invented a new kind of hard drive that's 100,000 times faster than traditional ones. A bold claim, to be sure, but is it accurate? Of course not. So for the last hour I've been looking into this thing to see what's real and what isn't.

https://x.com/Truthtellerftm/status/1917837808699420839

https://x.com/newslisted/status/1917890536306020824

Grossly misrepresented.

Right off the bat, all the pictures show some generic scientist with gloves on holding what is very obviously a CPU chip. Poxiao is a hard drive... not a CPU, so the very first foot forward is a complete fabrication. That is not what it looks like; in fact, all the descriptions of the product reiterate that it is the size of a "grain of rice". Does that look like a grain of rice? No. Stupid.

25 BILLION BITS OF DATA OMG

I absolutely fucking despise the unit bias that these assholes use to measure tech. First, it started by inflating the definition of a megabyte. A megabyte is 2^20 bytes, that's two to the twentieth power, because that's how computers work (binary base-2 math). Some genius realized that they could inflate the number because technically, by the official SI definition, MEGA means a million (standards bodies now call the 2^20 version a mebibyte, or MiB). So now instead of 1,048,576 bytes in a megabyte they can claim there are only 1,000,000 bytes in a megabyte. Two identical products can look different when marketed, and because people don't know better, many of them will just buy the one with the bigger number. Capitalism.
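To make the "same product, two numbers" point concrete, here is a small Python sketch that expresses one byte count in both decimal gigabytes (powers of 1000) and binary gibibytes (powers of 1024). The "1 TB" drive is a hypothetical example, not a specific product, and the function name is made up for illustration.

```python
# Compare how one drive's capacity reads in decimal (SI) vs binary units.
# The "1 TB" drive below is a hypothetical example, not a specific product.

DECIMAL_GB = 1000**3   # 1 GB as marketed: 10^9 bytes
BINARY_GIB = 1024**3   # 1 GiB: 2^30 bytes, the "binary" gigabyte


def capacity_in_both_units(total_bytes: int) -> tuple[float, float]:
    """Return (decimal GB, binary GiB) for the same number of bytes."""
    return total_bytes / DECIMAL_GB, total_bytes / BINARY_GIB


if __name__ == "__main__":
    one_tb = 1000**4  # what a "1 TB" label means under the SI definition
    gb, gib = capacity_in_both_units(one_tb)
    print(f"{gb:.1f} GB (decimal) = {gib:.1f} GiB (binary)")
```

Same bytes, different label: at the gigabyte scale the decimal figure comes out roughly 7% bigger than the binary one.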

But then BITS took this absurdity to the maximum.

Because everything is measured in BYTES, but some "genius" salesman realized, hey, we can make the number 8 times bigger by saying megabits per second instead of megabytes like everyone else. Fucking... bastards... So now everyone has to use this idiotic megabits measurement and then divide it by 8 to get megabytes, a number that actually makes sense. Internet speed could be 300 megabits per second, but then you realize that's only 37.5 megabytes per second after dividing by 8. Stupid.

So how fast is this garbage?

25 BILLION BITS OF DATA OMG!!!

Okay, so we take 25,000,000,000 and divide it by 8 to get bytes. That's 3,125,000,000 bytes per second. Divide that by 1024 to get kilobytes/sec: 3,051,757.8. Divide that by 1024 again to get megabytes/sec: 2,980.2. Divide that by 1024 once more to get gigabytes/sec.
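Here is the same chain of divisions as a quick Python sketch, assuming the 25 billion figure really is bits per second, the way the posts above frame it. Strictly speaking, dividing by 1024 gives KiB, MiB, and GiB, so the sketch labels them that way and also computes the decimal (1000-based) gigabytes a marketer would quote. The function name is hypothetical.

```python
# Convert an advertised bit rate into bytes per second, then into binary
# (1024-based) and decimal (1000-based) units, following the steps in the text.


def bits_to_units(bits_per_second: float) -> dict[str, float]:
    bytes_per_second = bits_per_second / 8          # 8 bits per byte
    return {
        "bytes/s": bytes_per_second,
        "KiB/s": bytes_per_second / 1024,           # divide by 1024 once
        "MiB/s": bytes_per_second / 1024**2,        # ...twice
        "GiB/s": bytes_per_second / 1024**3,        # ...three times
        "GB/s (decimal)": bytes_per_second / 1000**3,
    }


if __name__ == "__main__":
    for label, value in bits_to_units(25e9).items():
        print(f"{label:>15}: {value:,.2f}")
```

In decimal units the same rate is 3.125 GB/s, which is the flattering number you'd expect on a spec sheet. The same function also covers the internet example from earlier: `bits_to_units(300e6)["bytes/s"] / 1e6` gives 37.5 decimal megabytes per second.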

2.91 Gigabytes per second

Wait a minute... that's not even that fast. There are NVMe drives that are already faster than this right now, and they are production-grade hardware rather than a prototype. This new technology we are talking about might only be the size of a grain of rice, but it also currently stores only 1 KB of data. So what gives? Why would anyone be excited about this tech or make ridiculously lofty claims like it's 100,000 times faster than the leading competitor? Is it all just bullshit?

Of course, the conspiracy theorists come crawling out of the woodwork like, "This is just like when China launched Deepseek; they are trying to crash the economy again!" lol. no. shut up.

https://x.com/KEmperor2627/status/1913172448448823807

Oh, well, would you look at that.

This is not the world's fastest hard drive technology after all; it's the world's theoretically fastest flash memory... which, sure, is what SSDs are built on, I guess, but flash by itself was never the fastest kind of storage to begin with, so it's not like this tech is going to make servers, for example, more efficient unless the finished product can be made at a cost competitive with something like NVMe. It's cool that we could have a really fast drive like this that can connect and operate through a USB port, but is it really an upgrade? I had to ask AI a lot of questions to get the answers I was looking for.

x100000 faster?

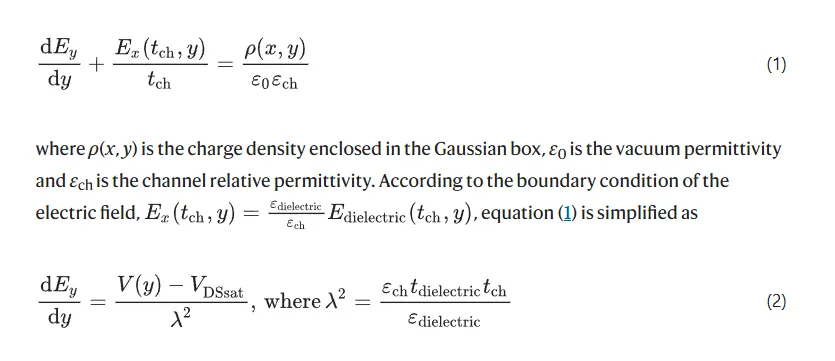

The first bit of confusion hit me when I realized that this thing has an access time of 400 picoseconds. If we take a trillion picoseconds per second and divide by 400 picoseconds, we get 2.5B bits per second (assuming one bit per access)... not 25B bits per second. So already it seems like the basic math is off by a factor of 10, in the direction of pretending it's faster than it actually is.

I asked AI about this and it told me not to worry, and that the 400 picoseconds isn't the amount of time it takes to do 1 bit, but rather the amount of time it takes for one cycle... and it can do 10 bits per cycle apparently, making sense of the discrepancy.
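The back-of-the-envelope math in the last two paragraphs fits in a tiny Python sketch: cycles per second is one over the cycle time, and bits per second is that times the number of bits handled per cycle. The 10-bits-per-cycle value is only what the AI explanation claimed, not a verified spec, and the function name is hypothetical.

```python
# Relate a per-cycle time to throughput:
#   cycles/second = 1 / cycle_time
#   bits/second   = cycles/second * bits handled per cycle
# The 10-bits-per-cycle figure is the AI's claim, not a verified spec.

PICOSECONDS_PER_SECOND = 10**12


def bits_per_second(cycle_time_ps: float, bits_per_cycle: int = 1) -> float:
    cycles_per_second = PICOSECONDS_PER_SECOND / cycle_time_ps
    return cycles_per_second * bits_per_cycle


if __name__ == "__main__":
    print(f"{bits_per_second(400):.3g} bits/s at 1 bit per cycle")
    print(f"{bits_per_second(400, bits_per_cycle=10):.3g} bits/s at 10 bits per cycle")
```

With one bit per cycle you land on 2.5 billion; multiply by the claimed 10 bits per cycle and you get the advertised 25 billion.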

However, AI was also quick to point out that NVMe drives derive a lot of their speed from parallelism. For example, without the ability to transfer data across wide, parallel bus lines, an NVMe drive might be 50 times slower. Which means if this new flash technology were able to scale up using parallelism within a production-grade environment, it might be blazing fast and a huge upgrade. Of course, we have no idea if that's possible, and if it is, we have no idea what the cost would be.
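A rough Python sketch of why parallelism matters, assuming ideal scaling: if identical units each move data at some rate, N of them side by side top out at N times that rate, and real systems lose some of it to coordination overhead (the hypothetical `efficiency` factor). The unit counts and the 70% figure are made up for illustration; nobody knows yet whether Poxiao-style cells can be ganged together like this, or at what cost.

```python
# Sketch: aggregate throughput of N identical units working side by side.
# Ideal scaling is N times one unit; `efficiency` (hypothetical) knocks off
# a share for coordination overhead. None of these numbers are Poxiao specs.


def parallel_throughput(per_unit_bytes_per_s: float, units: int,
                        efficiency: float = 1.0) -> float:
    """Return combined bytes/s for `units` parallel units."""
    if units < 1 or not 0 < efficiency <= 1:
        raise ValueError("need units >= 1 and 0 < efficiency <= 1")
    return per_unit_bytes_per_s * units * efficiency


if __name__ == "__main__":
    single = 25e9 / 8  # one device at 25 billion bits/s, converted to bytes/s
    for units in (1, 8, 50):
        ideal = parallel_throughput(single, units) / 1e9
        real_ish = parallel_throughput(single, units, efficiency=0.7) / 1e9
        print(f"{units:>2} units: {ideal:,.1f} GB/s ideal, {real_ish:,.1f} GB/s at 70%")
```

The ideal column is an upper bound; how close a real product gets depends on the overhead, and that's exactly the part nobody can answer yet.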

Regardless of that, AI was also quick to point out that the lightning-fast response times of this new technology could make it superior for certain applications that require extremely low latency: for example, real-time life-saving technology or hyper-competitive day trading, things of that nature where context switches are a big deal and it's more important to get a small task done extremely quickly than to tackle a big batch of operations. So I found that interesting...
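The latency-versus-throughput trade-off can be sketched in Python with the simplest possible model: the time for one request is a fixed latency plus size divided by bandwidth. Both devices below use made-up round numbers for illustration only; they are not measured specs for Poxiao or for any real NVMe drive.

```python
# Simple model: time for one request = fixed latency + size / bandwidth.
# Both devices use made-up round numbers purely for illustration; they are
# not measured specs of Poxiao or of any real NVMe drive.

from dataclasses import dataclass


@dataclass
class Device:
    name: str
    latency_s: float               # fixed delay before data starts moving
    bandwidth_bytes_per_s: float   # rate once data is flowing

    def transfer_time(self, size_bytes: int) -> float:
        return self.latency_s + size_bytes / self.bandwidth_bytes_per_s


fast_start = Device("low latency, modest bandwidth", 1e-9, 3e9)
wide_pipe = Device("higher latency, high bandwidth", 10e-6, 12e9)

if __name__ == "__main__":
    for size in (64, 1_000_000_000):  # a tiny task vs a big batch
        winner = min((fast_start, wide_pipe), key=lambda d: d.transfer_time(size))
        print(f"{size:>13,} bytes -> faster: {winner.name}")
```

With these numbers the low-latency device wins the 64-byte task by a wide margin, while the high-bandwidth device wins the 1 GB batch, which is the small-task versus big-batch distinction above.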

https://www.nature.com/articles/s41586-025-08839-w

AI also mentioned this Nature article several times when citing information, so I decided to take a look at it.

Oh... hell no

It's a highly dense research paper on the subject that would probably take me 40 hours of work to actually understand. No thanks. lol. I think we can skip that step of this journey. Think I'll stick to the gist of it, thanks.

Blurring the line between memory and computing

This is also an interesting statement to make because it implies that this new technology could potentially be used as a substitute for RAM... which means it would be blazing fast like RAM, but the data would also persist after the power gets turned off. Apparently this could be yet another piece of hardware that makes AI more efficient...

Poxiao’s speed and design could enable this better than NVMe drives, reducing the need to shuttle data between storage and RAM, which is a bottleneck in current systems.

It would also just be super badass to have a computer with persistent RAM. That would mean you could just unplug your computer at any time, and then when you plug it back in it would just boot up to right where you left off because none of the RAM information was lost from the power-off.

Something like this could also eliminate the threat of losing everything in RAM when a power outage wipes it. If you're as old as me, you surely remember that old versions of Windows would get completely wrecked if you just pulled the plug on them without a proper shutdown. These days there are all kinds of failsafe measures in place to keep these problems from happening, but perhaps this new flash technology will one day make those failsafe mechanisms unnecessary.

Conclusion

Did China just invent the world's fastest hard drive technology? In theory, maybe they did, but in practice it has a long long LONG way to go before it actually works out here in the concrete jungle of the real world. This is a technology that has very high potential if it can scale up cheaply, but it could also turn out to be a complete nothing-burger if technical hurdles prevent that from happening or if it's too impractical or expensive to operate.