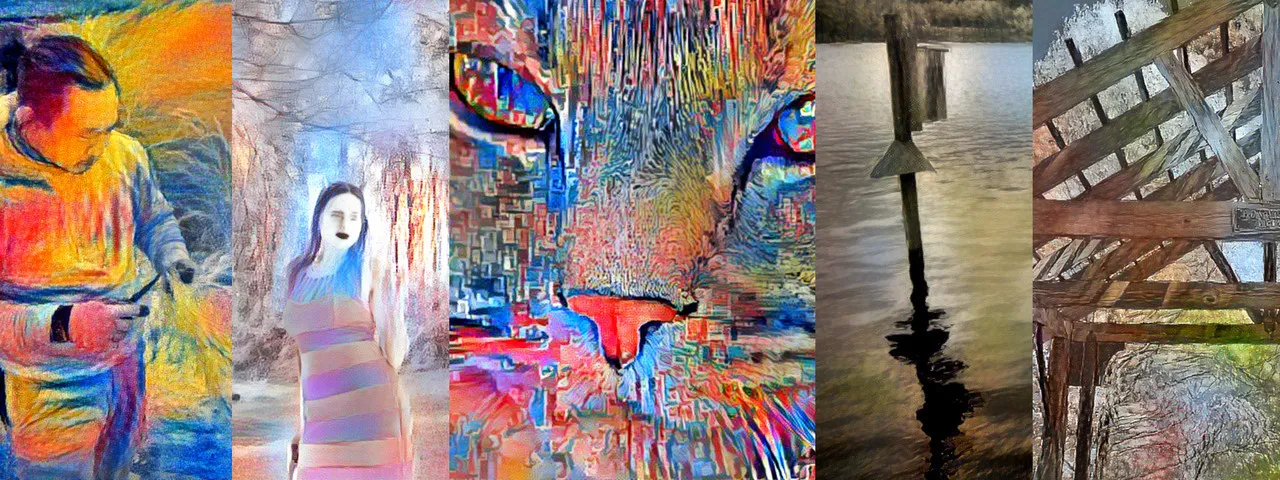

I take images I have captured in the ultraviolet and infrared spectrums and pass them through my video cards to create artwork out of them, adding colors, shapes and stylings that would not otherwise show up in the image. If you have heard of "deep dreaming", these renderings are made with a similar technology.

Out of the many I rendered, I only kept a few that I would like to "tokenize" on nftshowroom, a dapp on the Hive blockchain that helps bridge the gap between artists and buyers of art. By uploading my images I can list them for sale for Hive. When I posted images like these last year they got a lot of good attention, so I figured this would be a great time to show off some of my new art and to start a portfolio.

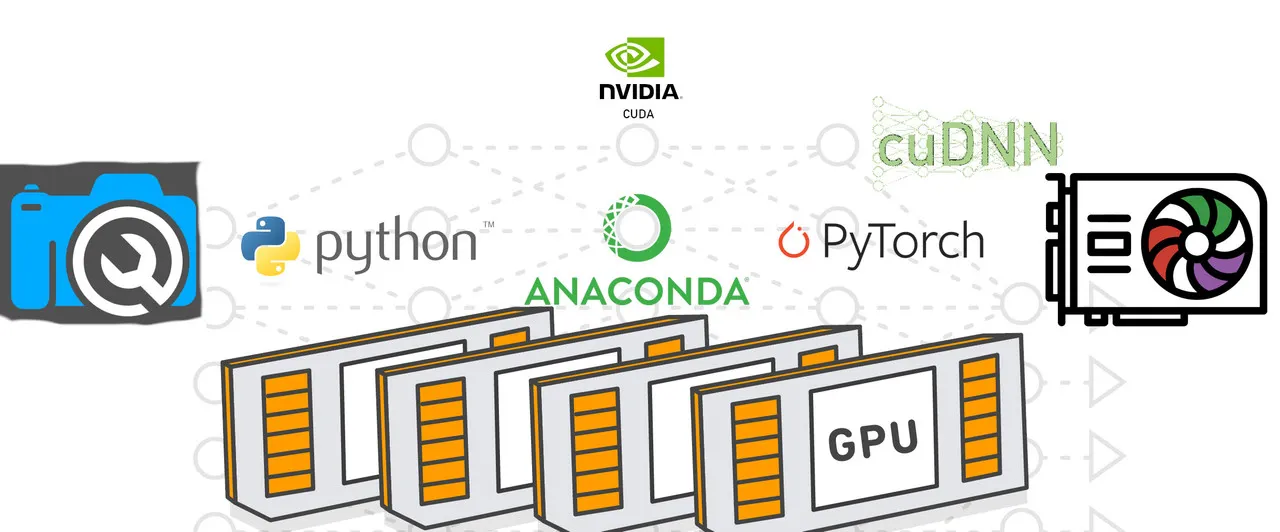

I use an array of physical tools, such as a modified camera that can see past the visible wavelengths of light, along with layers of software such as Anaconda, PyTorch and cuDNN, all running in a Python environment. Combined, these tools give the art I create a surreal and sometimes overly vivid appearance.

Check out artwork on nftshowroom.com

It's powered by Hive

I will do a post for each piece of art I release, showing the infrared or ultraviolet image I used as a base and the artwork that helped stylize the final piece that comes out after running through a deep convolutional network.

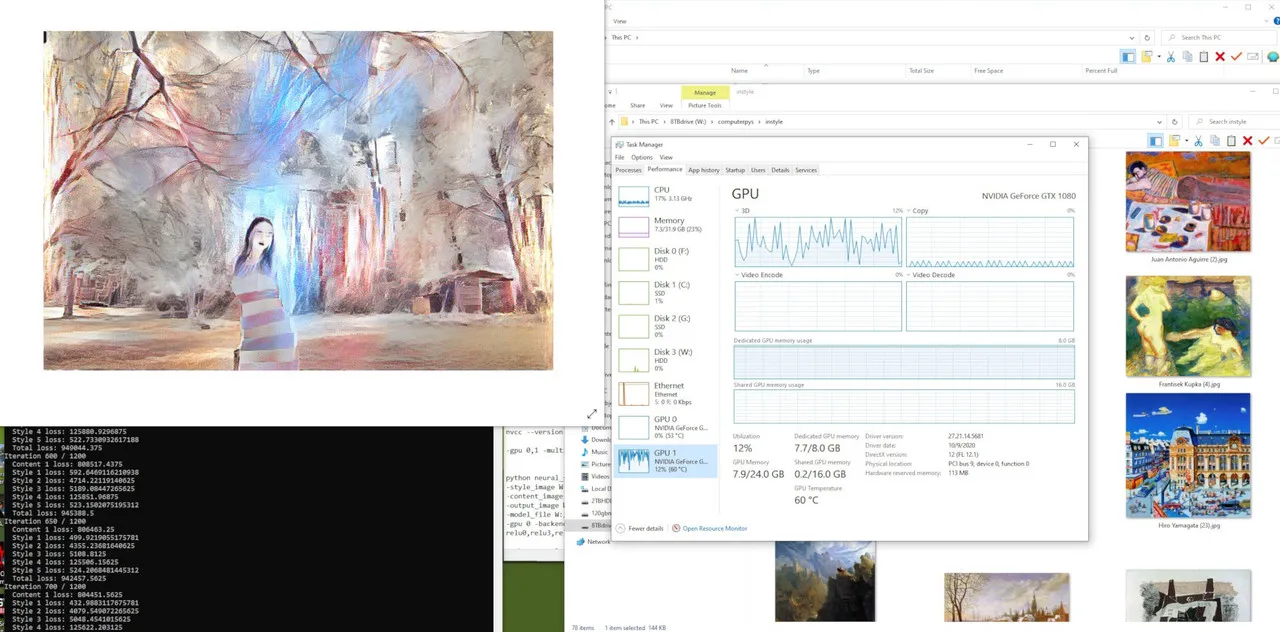

On the left are images of different scenes that have been rendered by computer vision to give each one a whole new look. On the right you can see how different the results can be for a single image depending on the settings and the style artwork supplied to the network.

Here is a peek at how this environment works. I can render up to about 2000x1500 pixels with my two Nvidia 1080 graphics cards using a feature called "Multi-Device Strategy", which splits the network's layers between the video cards to allow for larger workloads. Just about all of my video memory is used: combined, about 12GB of video RAM is consumed, and almost as much system memory as well.
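To make the idea of splitting layers between cards concrete, here is a minimal sketch in plain Python. It is only an illustration, not the script's actual code: the function name `partition_layers`, the layer names and the split index of 4 are all assumptions made up for this example, and the real script's indexing convention may differ.

```python
def partition_layers(layers, split_points, devices):
    """Assign consecutive slices of `layers` to `devices`.

    `split_points` are the indices at which the layer list is cut, so
    N devices need N - 1 split points. (Hypothetical helper for illustration.)
    """
    if len(devices) != len(split_points) + 1:
        raise ValueError("need exactly one more device than split points")
    bounds = [0, *split_points, len(layers)]
    return {
        device: layers[start:end]
        for device, start, end in zip(devices, bounds, bounds[1:])
    }


# Illustrative layer names only; a real network has many more layers.
layers = ["conv1_1", "relu1_1", "conv1_2", "relu1_2", "pool1", "conv2_1", "relu2_1"]

# With two cards, a single split point decides which layers go where.
placement = partition_layers(layers, [4], ["cuda:0", "cuda:1"])
for device, assigned in placement.items():
    print(device, assigned)
```

The early layers work on the image at full resolution, so they tend to be the most memory-hungry. That is why where the split falls matters more than simply dividing the layer count in half.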

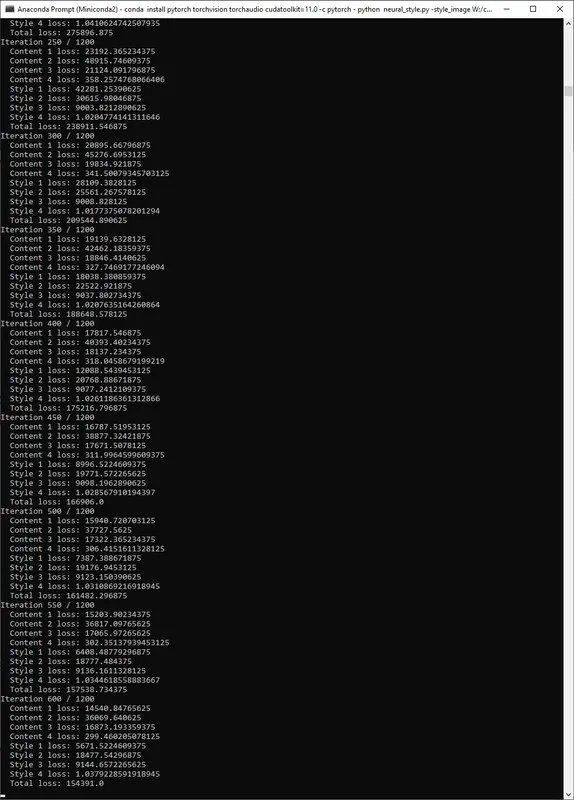

So this art is quite process-intensive to make, but well worth the results when using the right hardware. It takes my computer about 30 minutes to make 10 iterations, and then I decide which one came out best and save it for the showcase.
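For a rough feel of why renders at this size are so demanding, here is a back-of-the-envelope estimate. It assumes a VGG-19 style network, whose first convolution layer outputs 64 channels at full image resolution, and 32-bit floats. It covers a single activation map only, so real usage (gradients, later layers, optimizer state) is much higher and depends on the settings used.

```python
def activation_bytes(width, height, channels, bytes_per_value=4):
    """Memory needed to hold one layer's output for one image."""
    return width * height * channels * bytes_per_value


# One 2000x1500 render through a 64-channel first layer (assumed VGG-19).
first_layer = activation_bytes(2000, 1500, 64)
print(f"{first_layer / 1e6:.0f} MB")  # prints "768 MB"
```

One layer's output already takes up a good chunk of a single card, which is a big part of why the work has to be spread across both.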

https://github.com/ProGamerGov/neural-style-pt

More info on the Python script I use

All of this runs in a Miniconda shell, which has the needed dependencies already installed.
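As a rough sketch of what a two-card run might look like, the helper below builds a command line for the script's `neural_style.py` using flags from its README (`-content_image`, `-style_image`, `-output_image`, `-image_size`, `-gpu`, `-multidevice_strategy`, `-backend`). The helper name, the file names and the split value of 4 are placeholders I made up for illustration, not my actual settings.

```python
import shlex


def build_neural_style_args(content_image, style_image, output_image,
                            image_size=2000, gpus=(0, 1), split_layer=4):
    """Return an argv list for neural_style.py (hypothetical helper).

    `split_layer` is the layer index at which the network is divided
    between the two cards; 4 is a placeholder, not a recommendation.
    """
    return [
        "python", "neural_style.py",
        "-content_image", content_image,
        "-style_image", style_image,
        "-output_image", output_image,
        "-image_size", str(image_size),           # longest side in pixels
        "-gpu", ",".join(str(g) for g in gpus),   # e.g. "0,1" for two cards
        "-multidevice_strategy", str(split_layer),
        "-backend", "cudnn",
    ]


# Placeholder file names for illustration.
args = build_neural_style_args("infrared_base.png", "style_art.png", "render.png")
print(shlex.join(args))
```

Building the list first and printing it makes it easy to check the settings before committing to a long render; passing the same list to `subprocess.run` would start the job from inside the Miniconda environment.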

The next posts about this will cover each art piece, and maybe a more in-depth guide on how I do this graphics-accelerated art if others would like to see how it's all done.