A neural network is a model used in deep learning. It combines linear functions (weighted sums of its inputs) with non-linear functions (activations) to make predictions. To train the model, we pass the training data through this combination of functions many times, once per epoch, and adjust its parameters after every batch. Neural networks can be used for both regression and classification problems.
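As a minimal sketch of this idea, PyTorch's `nn.Sequential` can chain a linear layer, a non-linear ReLU activation and a second linear layer. The layer sizes here are arbitrary and chosen only for illustration:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Illustrative sizes: 4 input features, 8 hidden units, 3 outputs
model = nn.Sequential(
    nn.Linear(4, 8),  # linear: y = xW^T + b
    nn.ReLU(),        # non-linear: max(0, y), applied element-wise
    nn.Linear(8, 3),  # linear again, producing one score per output
)

x = torch.randn(5, 4)  # a batch of 5 examples with 4 features each
out = model(x)
print(out.shape)  # torch.Size([5, 3])

# ReLU zeroes out negative values and keeps positive ones
relu_demo = nn.ReLU()(torch.tensor([-1.0, 0.0, 2.0]))
print(relu_demo)  # tensor([0., 0., 2.])
```

Without the ReLU in the middle, the two linear layers would collapse into a single linear function, which is why the non-linear step matters.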

How we utilize the available data will depend on whether we are solving a regression or classification problem.

Here our data consists of images from the CIFAR-10 dataset, and our task is to build a neural network that identifies which of the dataset's 10 classes each image belongs to.
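A short sketch of how the regression/classification choice shows up in code, using made-up numbers: for classification the network outputs one raw score (logit) per class and is trained with cross-entropy loss, which applies a softmax internally; for regression it outputs a real value, and a loss such as mean squared error is a common choice. The tensors below are illustrative only:

```python
import torch
import torch.nn.functional as F

# Classification: one logit per class, integer class labels
logits = torch.tensor([[2.0, 0.5, -1.0],
                       [0.1, 0.2, 3.0]])
class_labels = torch.tensor([0, 2])
probs = F.softmax(logits, dim=1)     # each row becomes probabilities summing to 1
preds = torch.argmax(logits, dim=1)  # predicted class = index of the largest score
clf_loss = F.cross_entropy(logits, class_labels)

# Regression: one real-valued prediction per example, real-valued targets
predictions = torch.tensor([2.5, 0.0, 1.0])
targets = torch.tensor([3.0, -0.5, 1.0])
reg_loss = F.mse_loss(predictions, targets)  # mean of squared differences

print(preds)  # tensor([0, 2])
```

The CIFAR-10 model in this post is a classifier, so it uses `F.cross_entropy` on its 10 raw outputs.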

```python
import torch
import torchvision
import numpy as np
import matplotlib.pyplot as plt
import torch.nn as nn
import torch.nn.functional as F
from torchvision.datasets import CIFAR10
from torchvision.transforms import ToTensor
from torchvision.utils import make_grid
from torch.utils.data.dataloader import DataLoader
from torch.utils.data import random_split
%matplotlib inline
```

```python
# Project name used for jovian.commit
project_name = '03-cifar10-feedforward'

dataset = CIFAR10(root='data/', download=True, transform=ToTensor())
test_dataset = CIFAR10(root='data/', train=False, transform=ToTensor())

dataset_size = len(dataset)
dataset_size

test_dataset_size = len(test_dataset)
test_dataset_size
```

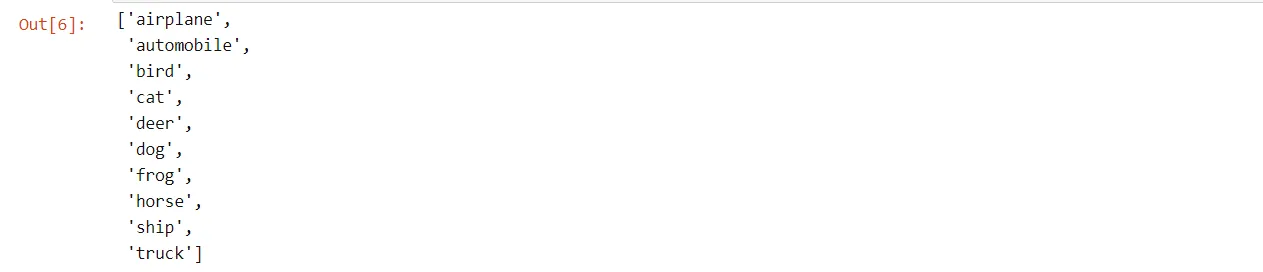

```python
classes = dataset.classes
classes

img, label = dataset[0]
img_shape = img.shape
img_shape

a = {}
for image, label in dataset:
    num_label = classes[label]
    if num_label not in a:
        a[num_label] = 0
    a[num_label] += 1
a
```

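We begin by importing the PyTorch packages and modules and downloading the CIFAR-10 dataset, which contains 50,000 training images and 10,000 test images. Each image is a 3×32×32 color tensor belonging to one of 10 classes. We then loop through the training data to count the number of images in each class.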
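Looping over the dataset applies the `ToTensor` transform to every image just to read its label. A faster sketch, assuming the labels are available as a plain list of integers (torchvision's `CIFAR10` dataset keeps them in its `targets` attribute), counts them with `collections.Counter`. The helper `count_per_class` and the toy labels are hypothetical, for illustration only:

```python
from collections import Counter

def count_per_class(targets, classes):
    """Map each class name to the number of labels equal to its index."""
    counts = Counter(targets)
    # Counter returns 0 for indices that never appear
    return {name: counts[idx] for idx, name in enumerate(classes)}

# Toy labels for illustration; with CIFAR-10 you could pass
# dataset.targets and dataset.classes instead
toy_classes = ['cat', 'dog', 'ship']
toy_targets = [0, 2, 2, 1, 0, 2]
print(count_per_class(toy_targets, toy_classes))
# {'cat': 2, 'dog': 1, 'ship': 3}
```

The CIFAR-10 training set is balanced, so every one of its 10 classes should come out at 5,000.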

```python
torch.manual_seed(43)

val_size = 5000
train_size = len(dataset) - val_size
train_ds, val_ds = random_split(dataset, [train_size, val_size])
len(train_ds), len(val_ds)

batch_size = 128
train_loader = DataLoader(train_ds, batch_size, shuffle=True, num_workers=4, pin_memory=True)
val_loader = DataLoader(val_ds, batch_size*2, num_workers=4, pin_memory=True)
test_loader = DataLoader(test_dataset, batch_size*2, num_workers=4, pin_memory=True)
```

In this step, we set aside 5,000 of the training images as a validation set and create DataLoaders that feed the data to the model in batches. We then write a function that computes the accuracy of the model's predictions.
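The split-and-batch step can be tried on a small scale. This sketch swaps CIFAR-10 for a hypothetical toy dataset of 100 random 3×32×32 tensors (built with `TensorDataset`) so it runs without a download, and holds out 10 examples the way the post holds out 5,000:

```python
import math
import torch
from torch.utils.data import TensorDataset, DataLoader, random_split

torch.manual_seed(43)

# Toy stand-in for CIFAR-10: 100 random "images" with labels in 0..9
images = torch.randn(100, 3, 32, 32)
labels = torch.randint(0, 10, (100,))
toy_dataset = TensorDataset(images, labels)

# 90 examples for training, 10 for validation
toy_train_ds, toy_val_ds = random_split(toy_dataset, [90, 10])

batch_size = 16
toy_train_loader = DataLoader(toy_train_ds, batch_size, shuffle=True)
toy_val_loader = DataLoader(toy_val_ds, batch_size * 2)

# The last batch may be smaller, so the number of batches rounds up
print(len(toy_train_loader), math.ceil(90 / batch_size))  # 6 6

xb, yb = next(iter(toy_val_loader))
print(xb.shape)  # torch.Size([10, 3, 32, 32])

# Same arithmetic for the post's 45,000 training images with batch_size=128
print(math.ceil(45000 / 128))  # 352
```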

```python
def accuracy(outputs, labels):
    _, preds = torch.max(outputs, dim=1)
    return torch.tensor(torch.sum(preds == labels).item() / len(preds))

class ImageClassificationBase(nn.Module):
    def training_step(self, batch):
        images, labels = batch
        out = self(images)                   # Generate predictions
        loss = F.cross_entropy(out, labels)  # Calculate loss
        return loss

    def validation_step(self, batch):
        images, labels = batch
        out = self(images)                   # Generate predictions
        loss = F.cross_entropy(out, labels)  # Calculate loss
        acc = accuracy(out, labels)          # Calculate accuracy
        return {'val_loss': loss.detach(), 'val_acc': acc}

    def validation_epoch_end(self, outputs):
        batch_losses = [x['val_loss'] for x in outputs]
        epoch_loss = torch.stack(batch_losses).mean()  # Combine losses
        batch_accs = [x['val_acc'] for x in outputs]
        epoch_acc = torch.stack(batch_accs).mean()     # Combine accuracies
        return {'val_loss': epoch_loss.item(), 'val_acc': epoch_acc.item()}

    def epoch_end(self, epoch, result):
        print("Epoch [{}], val_loss: {:.4f}, val_acc: {:.4f}".format(epoch, result['val_loss'], result['val_acc']))

@torch.no_grad()  # Evaluation needs no gradients; skipping them saves memory and time
def evaluate(model, val_loader):
    outputs = [model.validation_step(batch) for batch in val_loader]
    return model.validation_epoch_end(outputs)

def fit(epochs, lr, model, train_loader, val_loader, opt_func=torch.optim.SGD):
    history = []
    optimizer = opt_func(model.parameters(), lr)
    for epoch in range(epochs):
        # Training phase
        for batch in train_loader:
            loss = model.training_step(batch)
            loss.backward()
            optimizer.step()
            optimizer.zero_grad()
        # Validation phase
        result = evaluate(model, val_loader)
        model.epoch_end(epoch, result)
        history.append(result)
    return history
```

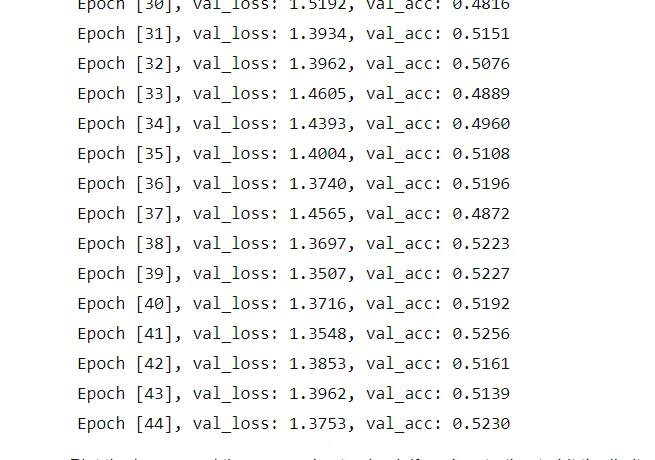

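In this step, we define the training logic. The base class generates predictions and computes the cross-entropy loss and accuracy for each batch, `evaluate` averages these over the validation set, and `fit` runs the training loop with stochastic gradient descent (SGD).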
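To see what the `accuracy` function computes, here is a worked example on a made-up batch of four predictions over three classes. The function body is the same as the one above; only the scores and labels are invented:

```python
import torch

def accuracy(outputs, labels):
    # The index of the highest score in each row is the predicted class
    _, preds = torch.max(outputs, dim=1)
    # Fraction of predictions that match the labels
    return torch.tensor(torch.sum(preds == labels).item() / len(preds))

# Made-up scores for 4 images and 3 classes
outputs = torch.tensor([[2.0, 0.5, 0.1],   # predicted class 0
                        [0.2, 0.3, 1.5],   # predicted class 2
                        [1.0, 3.0, 0.0],   # predicted class 1
                        [0.9, 0.1, 0.2]])  # predicted class 0
labels = torch.tensor([0, 2, 2, 0])

acc = accuracy(outputs, labels)
print(acc)  # tensor(0.7500)
```

Three of the four predictions match, giving 0.75. Note that `validation_epoch_end` averages these per-batch accuracies, so the last, possibly smaller, batch counts as much as the full ones.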

```python
torch.cuda.is_available()

def get_default_device():
    """Pick GPU if available, else CPU"""
    if torch.cuda.is_available():
        return torch.device('cuda')
    else:
        return torch.device('cpu')

device = get_default_device()
device

def to_device(data, device):
    """Move tensor(s) to chosen device"""
    if isinstance(data, (list, tuple)):
        return [to_device(x, device) for x in data]
    return data.to(device, non_blocking=True)

class DeviceDataLoader():
    """Wrap a dataloader to move data to a device"""
    def __init__(self, dl, device):
        self.dl = dl
        self.device = device

    def __iter__(self):
        """Yield a batch of data after moving it to device"""
        for b in self.dl:
            yield to_device(b, self.device)

    def __len__(self):
        """Number of batches"""
        return len(self.dl)

train_loader = DeviceDataLoader(train_loader, device)
val_loader = DeviceDataLoader(val_loader, device)
test_loader = DeviceDataLoader(test_loader, device)
```

```python
input_size = 3*32*32
output_size = 10

class CIFAR10Model(ImageClassificationBase):
    def __init__(self):
        super().__init__()
        self.linear1 = nn.Linear(input_size, 1792)
        self.linear2 = nn.Linear(1792, 896)
        self.linear3 = nn.Linear(896, 448)
        self.linear4 = nn.Linear(448, output_size)

    def forward(self, xb):
        # Flatten images into vectors
        out = xb.view(xb.size(0), -1)
        # Apply layers & activation functions
        out = self.linear1(out)
        out = F.relu(out)
        out = self.linear2(out)
        out = F.relu(out)
        out = self.linear3(out)
        out = F.relu(out)
        out = self.linear4(out)
        return out
```

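Training a neural network does not strictly require a Graphics Processing Unit (GPU), but a GPU speeds it up considerably. We therefore wrote helper functions that use the GPU when one is available and fall back to the CPU otherwise. The model itself flattens each image into a vector of 3×32×32 = 3072 values and passes it through four linear layers, with a non-linear ReLU activation after each of the first three.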
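A quick way to gauge the size of this model is to count its parameters: each `nn.Linear(n_in, n_out)` layer has `n_in * n_out` weights plus `n_out` biases, and ReLU has none. The sketch below does the arithmetic for the layer sizes of `CIFAR10Model`; the helper name `count_linear_params` is hypothetical:

```python
# Layer sizes of CIFAR10Model: 3072 inputs -> 1792 -> 896 -> 448 -> 10 outputs
layer_sizes = [3 * 32 * 32, 1792, 896, 448, 10]

def count_linear_params(sizes):
    """Weights (n_in * n_out) plus biases (n_out) for each consecutive pair of sizes."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))

total = count_linear_params(layer_sizes)
print(total)  # 7519690
```

The first layer alone contributes 5,506,816 of these parameters. With PyTorch you can confirm the total on the real model with `sum(p.numel() for p in model.parameters())`.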

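```python
model = to_device(CIFAR10Model(), device)

# Evaluate the untrained model first so that `history` exists before `+=`
history = [evaluate(model, val_loader)]
history += fit(45, 0.004, model, train_loader, val_loader)
```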

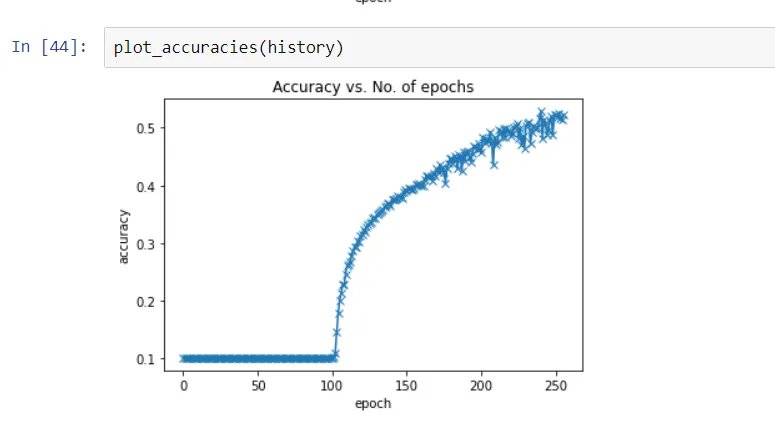

This step trains the model for 45 epochs and reports the validation loss and accuracy after each one. The model reached about 52% accuracy. To view my Jupyter notebook, click here. Next week, we will learn how to increase the accuracy!
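`fit` returns a list with one dictionary per epoch, each holding `val_loss` and `val_acc`. A small sketch for picking out the entry with the best validation accuracy follows; the helper name `best_epoch` and the numbers in `toy_history` are hypothetical and are not results from this post:

```python
def best_epoch(history):
    """Return (index, val_acc) of the entry with the highest validation accuracy."""
    accuracies = [result['val_acc'] for result in history]
    # max() returns the first index if several entries tie
    best = max(range(len(accuracies)), key=lambda i: accuracies[i])
    return best, accuracies[best]

# Hypothetical history, shaped like the output of fit()
toy_history = [
    {'val_loss': 2.30, 'val_acc': 0.10},
    {'val_loss': 1.70, 'val_acc': 0.40},
    {'val_loss': 1.50, 'val_acc': 0.47},
    {'val_loss': 1.55, 'val_acc': 0.45},
]
print(best_epoch(toy_history))  # (2, 0.47)
```

The returned value is a position in the list, so if the first entry is an evaluation of the untrained model, position 1 corresponds to the first training epoch.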
