Ethical Use of AI: The Hive Perspective

Alright, I think this discussion is necessary given the recent proliferation of AI content on Hive. I am writing this post to create awareness, start discussions, and do a little soul searching about which direction we would like to take this decentralized platform of ours in terms of content. I am no expert on AI (Artificial Intelligence), but I have done a fair bit of reading on the subject and try to stay on top of recent trends. At my day job, I sit on multiple technical committees on how to implement AI in geosciences and corporate intelligence. These typically include product champions from Microsoft and Google, and I lead multiple scientific consortia on the use of AI in the energy space. So with that out of the way, let us talk about our own personal space: Hive.

Before I get into the details, I would like to clarify a few terms for people who are either less experienced or from a slightly different background, so that we can speak the same language.

In this post, I am going to talk about generative AI and its ethical use. So what is generative AI?

A type of artificial intelligence (AI) capable of creating original work. It is a portion of our larger AI toolbox.

Produces new content – text, code, audio or images – based on patterns learned from a given dataset

Predicts what follows – auto-complete for everything
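To make the "auto-complete" idea concrete, here is a toy sketch of next-word prediction. It is not how any of the real chatbots work internally (they use large neural networks); it only counts which word tends to follow which in a made-up sentence, and the function names are my own illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    followers = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Made-up training text, purely for illustration.
corpus = "hive is a social network and hive is decentralized and hive is a community"
model = train_bigrams(corpus)

print(predict_next(model, "hive"))  # is
print(predict_next(model, "is"))    # a
```

The real tools learn far richer patterns from far more data, but the principle of "predict what follows from what was seen before" is the same.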

There are numerous companies and tools currently in use in the public domain, and on Hive:

- Text based chat bots: Gemini, Copilot, ChatGPT, etc.

- Text to code chat bot: Codex

- Text to voice bot: Lovo

- Text to research and podcast: NotebookLM, ChatGPT Deep Research

- Text to image: DALL-E, Grok, Bing Image Creator, Midjourney

Each of these tools is already being used on Hive. I have used almost all of them in various capacities. This is not an exhaustive list by any means; the full list is very long.

What we want to accomplish as a human race is to use technology responsibly. This is especially true when a technology is new and disruptive. Responsible AI usage refers to the ethical, lawful, and human-centric use of AI technologies. Why does this matter here? Because Hive is a social network. A social network thrives on human-to-human interactions. This is absolutely critical for the survival of any social network. The trouble is that, since Hive is decentralized, the governance of something this complex is still community driven. Since we do not have a direct governing body that can set boundary conditions, it is up to the community to create some. If we do not, there will soon be nothing left to save! I made the infographic above; please go through it and see which item has the most impact on day-to-day interactions on Hive.

A couple of movements

There is a battle of ideology going on in the AI world. There are two opposing forces:

- Effective Accelerationism, or e/acc

- Effective Altruism

There are volumes of material on both of these topics, and I can't pretend to cover even a small percentage of it. However, let me point to a few key articles and institutes on either side.

If you haven't read Marc Andreessen's The Techno-Optimist Manifesto, you should read it right now! No discussion of Effective Accelerationism would be complete without it. The writing is catchy, but the implications can be deep and long lasting. Many people in the current US administration, including Elon Musk, are big proponents of this movement.

On the other side of the battle, the front is weak, especially after the recent US election results. However, the folks at the Center for AI Safety are well funded and smart, and they are putting up a good fight. At the forefront of this battle is Dan Hendrycks, who is the director of the Center for AI Safety and one of the designers of Humanity's Last Exam. This exam, they claim, is the hardest test ever administered to AI systems.

The questions on Humanity’s Last Exam went through a two-step filtering process. First, submitted questions were given to leading A.I. models to solve.

If the models couldn’t answer them (or if, in the case of multiple-choice questions, the models did worse than by random guessing), the questions were given to a set of human reviewers, who refined them and verified the correct answers. Experts who wrote top-rated questions were paid between $500 and $5,000 per question, as well as receiving credit for contributing to the exam.
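For readers who think better in code, the two-step filter described above can be sketched roughly like this. This is only my reading of the description, not the exam team's actual pipeline: the field names, the `human_review` callback, and the way I compare multiple-choice accuracy with random guessing are all assumptions for illustration.

```python
def stumps_models(model_correct, num_choices=None):
    """Step 1: did the question defeat the models?

    model_correct: list of booleans, one per model attempt (hypothetical input).
    num_choices: number of options for a multiple-choice question, else None.
    """
    if not model_correct:
        raise ValueError("need at least one model attempt")
    accuracy = sum(model_correct) / len(model_correct)
    if num_choices is None:
        # Open-ended question: no model may get it right.
        return accuracy == 0.0
    # Multiple choice: models must do worse than random guessing.
    return accuracy < 1.0 / num_choices


def two_step_filter(submissions, human_review):
    """Step 2: questions that survive step 1 go to human reviewers.

    human_review is a hypothetical callback that returns the refined,
    verified question, or None if the reviewers reject it.
    """
    accepted = []
    for question in submissions:
        if not stumps_models(question["model_correct"], question.get("num_choices")):
            continue
        reviewed = human_review(question)
        if reviewed is not None:
            accepted.append(reviewed)
    return accepted


def approve_everything(question):
    """Stand-in reviewer for the demo: marks every question as verified."""
    return {**question, "verified": True}


# Made-up submissions, not real exam data.
submissions = [
    {"id": "q1", "model_correct": [False, False, False]},
    {"id": "q2", "model_correct": [True, False, False]},
    {"id": "q3", "model_correct": [True, False, False, False, False], "num_choices": 4},
    {"id": "q4", "model_correct": [True, True, False, False], "num_choices": 4},
]
kept = two_step_filter(submissions, approve_everything)
print([q["id"] for q in kept])  # ['q1', 'q3']
```

In this made-up example, q2 is dropped because one model solved it, and q4 is dropped because the models beat the 25% you would expect from guessing among four options.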

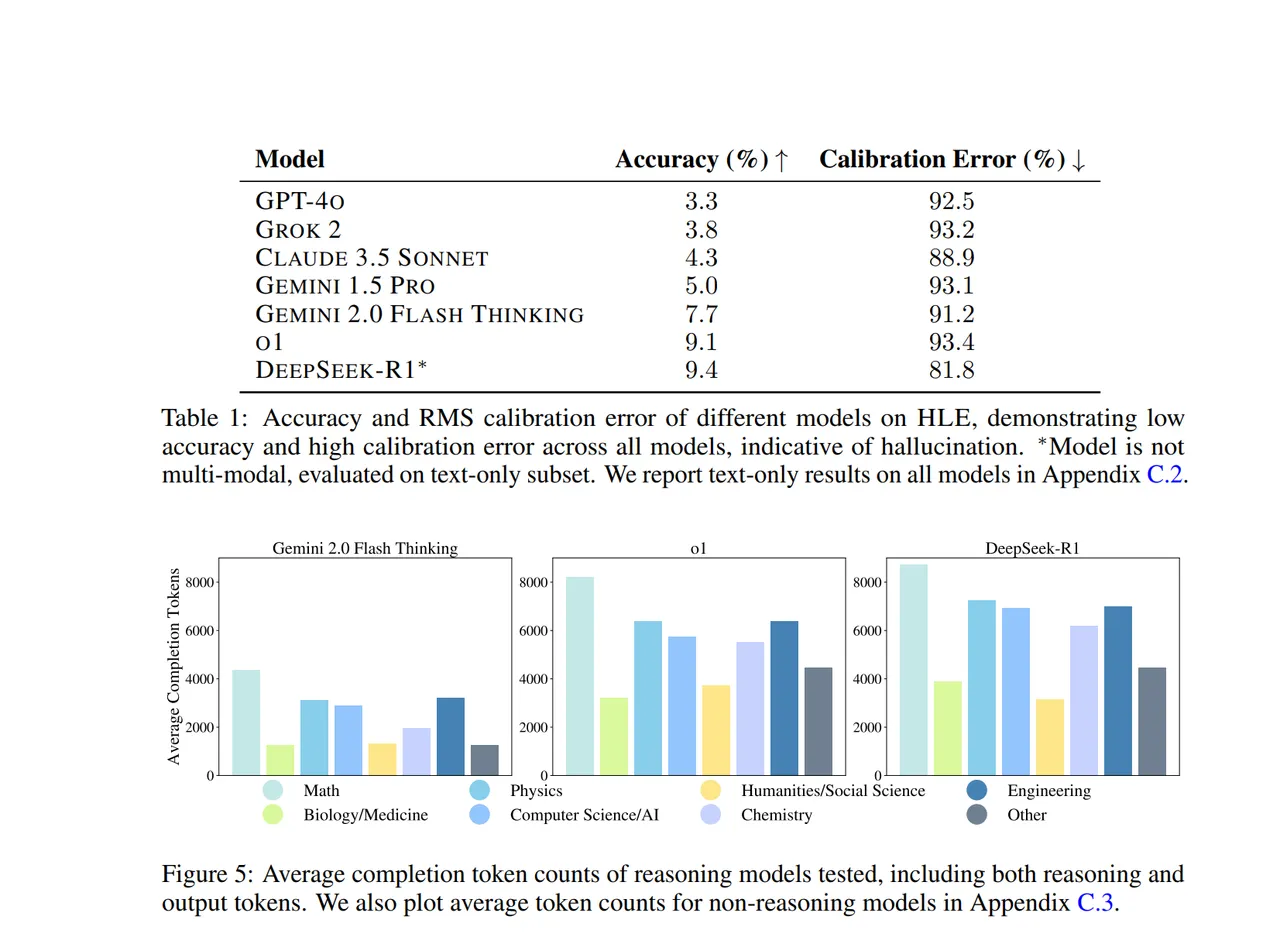

These are questions you might ask a PhD student. However, these are PhD-level questions in all the basic sciences and technology at the same time. They were administered to six leading AI models. Here are the results from the paper I linked above, Humanity's Last Exam:

Don't take comfort in the less-than-ideal scores of these chatbots, as these are incredibly difficult questions for most people. The prediction is that by the middle of this year, the models will be able to score above 50% on this test.

Here is one question in Physics (page 5 of the paper lists several sample questions):

A block is placed on a horizontal rail, along which it can slide frictionlessly. It is attached to the end of a rigid, massless rod of length R. A mass is attached at the other end. Both objects have weight W. The system is initially stationary, with the mass directly above the block. The mass is given an infinitesimal push, parallel to the rail. Assume the system is designed so that the rod can rotate through a full 360 degrees without interruption. When the rod is horizontal, it carries tension T1. When the rod is vertical again, with the mass directly below the block, it carries tension T2. (Both these quantities could be negative, which would indicate that the rod is in compression.) What is the value of (T1−T2)/W?

No, I can't answer it :) Not without some research. But I don't have a PhD in Physics. @lemouth, can you?

I am just introducing the two movements here, not forcing you into one camp or the other. However, I can tell you which camp I am in. You have probably figured it out from my title already: I am in the second camp. I believe AI requires checks and balances; otherwise it might lead to something that we can't turn around soon enough. No, I am not thinking of Skynet; that happens in the movies. The reality will be a slow and steady death of social order, and we don't need nukes for that.

Turning it back to Hive

Recently I have been pointing out a few instances of AI-related spam on Hive:

- An AI auto-comment bot, which made 330K comments and counting (nuked)

- An automated AI post about trending authors, with rewards distributed to the authors (why? why is it needed?)

- An automated comment bot, posting comments for rewards (nuked by the community)

A recent comment by @gabrielatravels on my post, and my own answer to it, alarmed me a lot.

@gabrielatravels/re-azircon-srie35

With the proliferation of AI agents, anyone can take the content of any account, simply point a link at NotebookLM, and get a decent summary. The first reviews of Deep Research in OpenAI's ChatGPT (paid version) are very good.

A large part of my academic career was spent on research. In fact, when you open any master's or PhD thesis, a significant portion of it is typically dedicated to 'previous work'! Deep Research can do that in minutes. I have seen the quality, and if the sources are publicly available, it is better than what I can put together after months of work.

https://openai.com/index/introducing-deep-research/

This is currently $200/month, and it is putting research assistants and editorial support staff at magazines out of work today. I am not being dramatic at all. This is a fact, today.

That said, in a social network, where we get 'paid' for our interaction, how safe is our ecosystem?

What is at stake?

Do we want to talk to a chat bot, or have a conversation with a fellow human?

The answers to these questions are simple to me. If they are simple to you too, then the community needs to come together and at least put the following in place:

- have an option for AI generated content to opt out of the Hive reward pool

- discourage comment bots 100%

- discourage aggregator posts, especially when posted for rewards

- generally all communities should have a written policy regarding AI generated text

- in my mind, AI generated art is okay, as long as it is not the main focus of the post
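On the first point, Hive already lets an author decline the payout on an individual post, so an opt-out for AI generated content could perhaps build on that. Below is a minimal sketch of what such an operation might look like as plain data. The field names follow my understanding of the `comment_options` operation and should be checked against the Hive developer documentation before use; the author and permlink values are placeholders, and nothing here signs or broadcasts anything.

```python
import json


def decline_payout_op(author, permlink):
    """Build a comment_options operation that sets the maximum payout to zero.

    Assumption: field names as I understand them from the Hive developer docs.
    This only builds the data; signing and broadcasting are not shown.
    """
    return [
        "comment_options",
        {
            "author": author,
            "permlink": permlink,
            "max_accepted_payout": "0.000 HBD",  # zero means no reward is accepted
            "percent_hbd": 10000,
            "allow_votes": True,
            "allow_curation_rewards": True,
            "extensions": [],
        },
    ]


# Placeholder account and permlink, purely for illustration.
op = decline_payout_op("example-author", "my-ai-assisted-post")
print(json.dumps(op, indent=2))
```

A front end could attach something like this automatically whenever an author ticks a hypothetical "AI generated" box, but that is a design decision for the community and the app developers.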

I am not a puritan. I have seen AI generated videos and comic books that are amazingly good and appropriate, thanks to @bravetofu. However, it is from Brave that I learned how much effort goes into those products to reach that quality. Mentioning the fact that something is AI generated should be mandatory. What I prefer not to have is mindless spam, which is the direction multiple key Hive communities are heading. This must be stopped.