It is a hypothesis put forward by Kurzweil and others. It is interesting, but it raises some major safety concerns. If AGI is developed in the wrong way, its benefits might not be shared. It also might not be possible to develop AGI in a way that allows it to be contained.

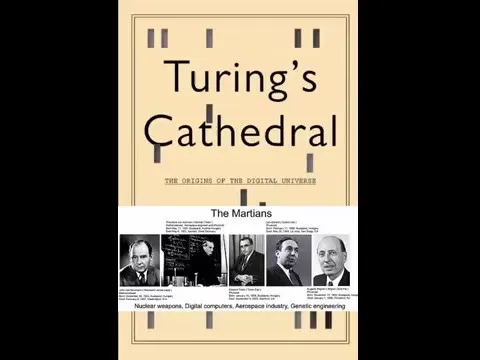

Personally, I think a good use case for AGI is a von Neumann probe.

RE: The Societal Complexity Explosion and Wisdom Asymmetry