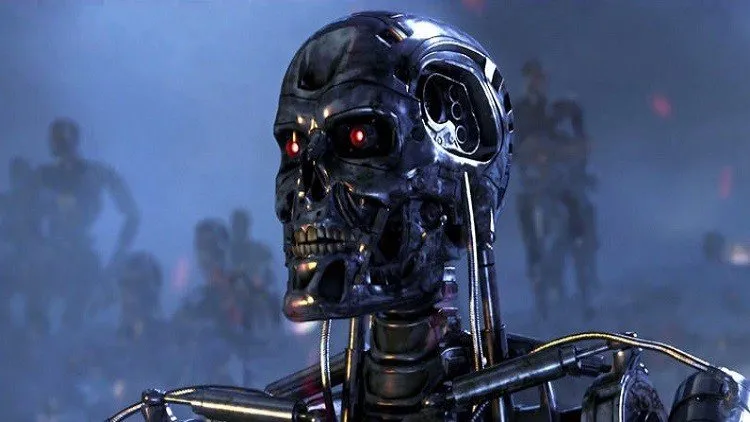

Pioneers of artificial intelligence and robotics have signed an open letter calling on the United Nations to ban lethal autonomous weapons, known as "killer robots".

Technology leaders, including Elon Musk and Mustafa Suleyman, a co-founder of Google's DeepMind, said the development of such technology would bring about a "third revolution in warfare", comparable to the invention of gunpowder and nuclear weapons.

"Once developed, lethal autonomous weapons will permit armed conflict to be fought at a scale greater than ever, and at timescales faster than humans can comprehend," the letter said.

The letter, signed by 116 founders of artificial intelligence and robotics companies from 26 countries, was published this week ahead of the International Joint Conference on Artificial Intelligence (IJCAI), to coincide with the start of formal UN talks on a possible ban.


The experts who signed the letter say that using lethal robots without human intervention is "morally wrong", and that such weapons should therefore be restricted under the UN Convention on Certain Conventional Weapons (CCW), which entered into force in 1983.

The convention restricts the use of a range of weapons, including landmines, incendiary weapons and blinding laser weapons.

Several countries, including the United States, China and Israel, are currently developing autonomous lethal weapons. Some systems have already been deployed, such as sentry towers equipped with machine guns that can select targets and fire without human intervention.

At the same time, supporters of these deadly weapons say advanced technology can reduce casualties in combat and can more accurately distinguish between civilians and combatants.

Countries nonetheless appear unwilling to slow the development of these weapons, for fear of being outpaced by their rivals.