From reading Ned's post announcing 70% staff cuts at Steemit Inc, it is clear that infrastructure costs, particularly the ongoing costs of paying outsourced "cloud" providers for running full node servers supporting APIs are a big problem for Steemit Inc.

This is a problem that arises from centralisation and it can be solved by decentralisation!

It has always concerned me that in a supposedly decentralised environment so many witnesses and Steemit Inc itself were outsourcing to centralised companies running big server farms for the actual hardware that is powering decentralised blockchains like Steem.

There are three four big problems with this:

- Cost 1. It is far more costly in the long run to rent something from someone else than to own it yourself.

- Cost 2. With outsourced "cloud" companies you are paying for density, reliability and redundancy that properly decentralised systems don't need.

- Undermining Decentralisation: Use of out outsourced cloud servers creates centralisation that reduces the security and redundancy of the system.

- Impact of Steem price. If you own your hardware then you can ride out low prices. If your rent it you are committed to fees every month and may need to power down and sell to meet those costs.

@anyx has made a series of posts on these issues and has put his money where his mouth is and created his own gold standard 512Gb Xeon server running a full node with APIs: https://anyx.io.

https://steempeak.com/steem/@anyx/what-makes-a-dapp-a-dapp

https://steempeak.com/steem/@anyx/fully-decentralizing-dapps

https://steempeak.com/steem/@anyx/announcing-https-anyx-io-a-public-high-performance-full-api

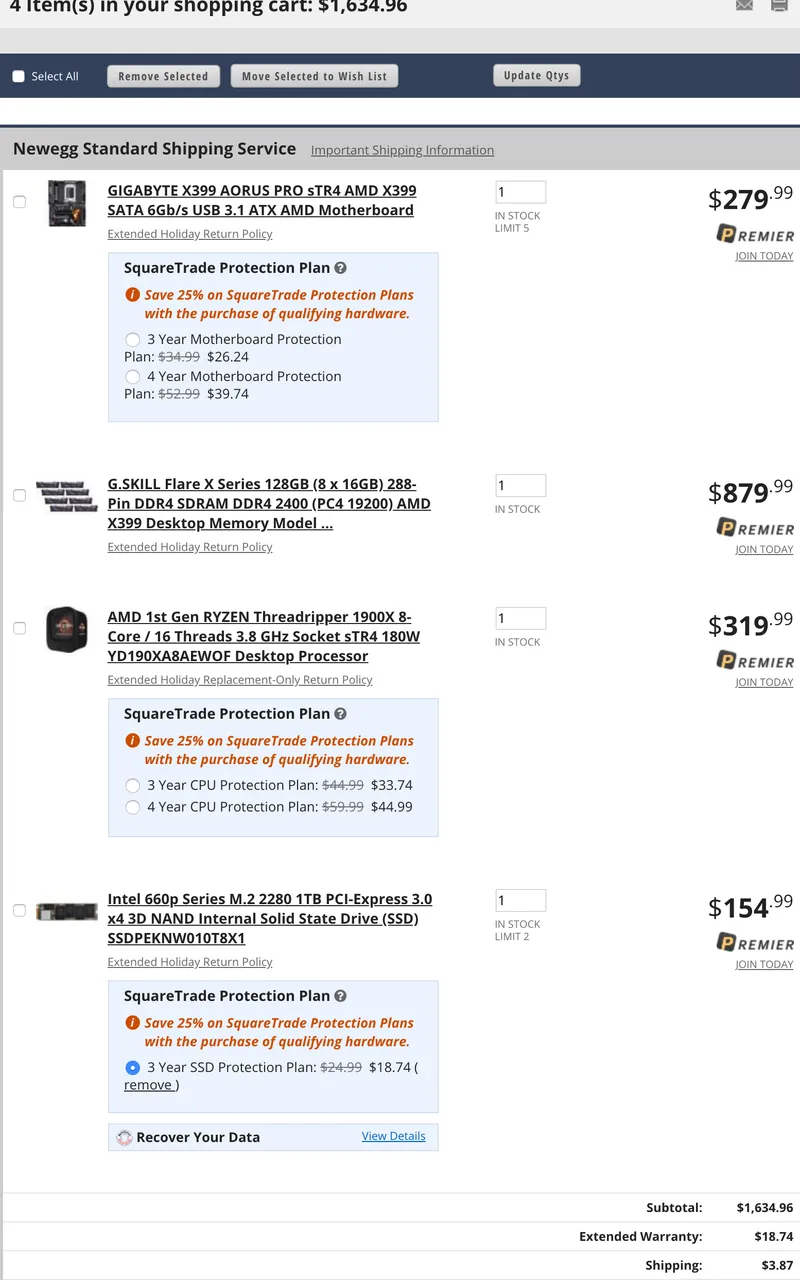

However, as @anyx notes, it is possible to create a witness server running some APIs or a node running lots a APIs using a 128Gb server based on relatively inexpensive High End Desktop (HEDT) systems costing less than $2000.

Doing some further analysis shows that witnesses can run a full node on 3 HEDT class machines. Top 20 Witnesses need 3 servers in any case to run the main steem node, backup node and seed node.

Quoting @anyx

"For Steem, the current usage as of this articles time of writing is 45 GB for a consensus node. Each API you add will require more storage (for example, the follow plugin requires about 20 GB), adding up to 247 GB for a "full node" with all plugins on the same process (note, history consists of an addition 138 GB on disk).

Notably, if you split up the full node into different parts, each will need a duplicate copy of the 45 GB of consensus data, but you can split the remaining requirements horizontally.

With these constraints in mind, commodity machines (which have the highest single core speeds) are excellent candidates for consensus nodes, with Workstation class Xeons coming in a close second."

This means 3 x 128Gb memory servers on HEDT class hardware can run all the APIs needed for a full node. API data is 202 GB (247-45 Gb) split into 68Gb on each machine + 45Gb per machine for consensus data = 113Gb (which is less than 128Gb max for HEDT class machine).