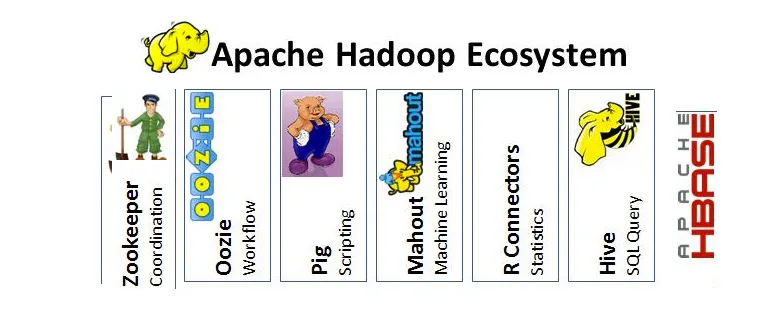

Apache Hadoop

Open-source software for distributed computing


Hunter's comment

Let's say you have to analyze or process a huge amount of data. How would you do it? How many processing units (nodes or computers) would you need to attach, and how would you make them communicate with each other?

The answer is Hadoop.

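Hadoop is a framework that lets you store and process huge amounts of data in a distributed environment: the data and the work are split across a cluster of computers, so each node handles only part of the job.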

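Hadoop's processing model, MapReduce, works in two phases: Map and Reduce. The Map phase turns each input record into intermediate key-value pairs, and the Reduce phase combines all values that share the same key into a final result. You can learn more about this on the project's site.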
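To make the two phases concrete, here is a minimal single-machine sketch of the classic word-count example in plain Python. It only illustrates how data flows from Map, through the grouping step Hadoop performs between the phases (the "shuffle"), to Reduce. It is not Hadoop's API: real Hadoop jobs are usually written in Java against the Hadoop MapReduce libraries and run across many nodes. The function names `map_phase`, `shuffle`, `reduce_phase` and `word_count` are hypothetical, chosen for illustration.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    # Map: emit a (word, 1) pair for every word in one input record (a line of text).
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    # Group intermediate values by key; in Hadoop the framework does this
    # between the Map and Reduce phases (here it is simulated in memory).
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    # Reduce: combine all counts emitted for the same word.
    return key, sum(values)


def word_count(lines: list[str]) -> dict[str, int]:
    # Run Map over every line, group the pairs by key, then Reduce each group.
    intermediate = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(intermediate)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    docs = ["big data is big", "data is everywhere"]
    print(word_count(docs))  # {'big': 2, 'data': 2, 'is': 2, 'everywhere': 1}
```

On a real cluster, each node would run the Map step on its own slice of the input, and the Reduce steps would also be spread across nodes by key. That distribution is what lets the same two-phase pattern scale to huge data sets.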


This is posted on Steemhunt, a place where you can dig products and earn STEEM.
