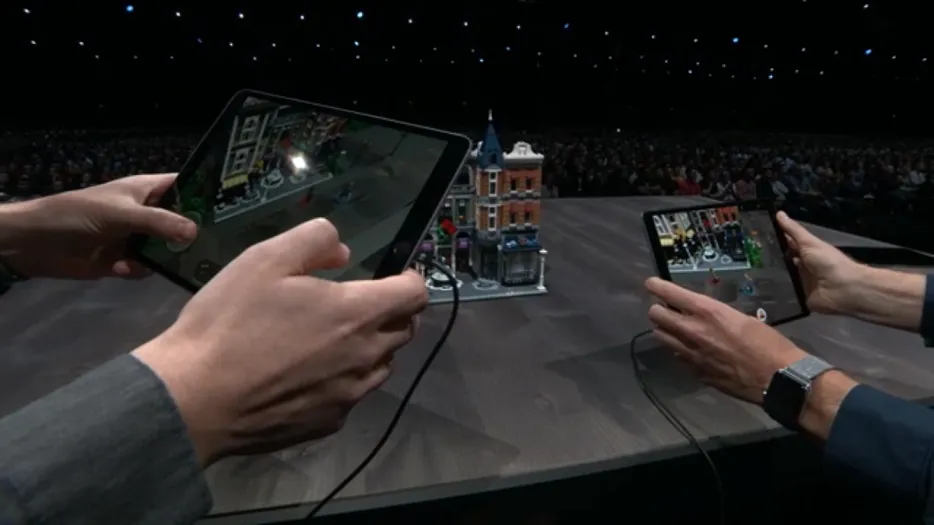

ARKit 2.0

Lets You Control an iPhone with Your Eyes

Screenshots

Hunter's comment

Apple has confirmed that new features for mobile augmented reality apps will arrive this fall. Developers have worked hard to provide a deeper, more immersive experience through the introduction of 3D objects.

Apparently, many mobile AR applications can be developed further and improved by taking advantage of the features in ARKit 2.0.

In the process, a developer named Matt (@thefuturematt on Twitter) discovered some unexpected possibilities in ARKit 2.0. As reported by Mashable, after experimenting with the iOS 12 developer release, Matt found that ARKit 2 can track eye movement quickly. He also wondered whether the tracking would be accurate enough to target points on the iPhone screen. To find out, Matt created a demo showing how precise the eye tracking in ARKit 2.0 is.

https://twitter.com/thefuturematt/status/1004821303486906369

- thefuturematt

In a demo video uploaded to Twitter, he controls the iPhone using only his eyes. He looks at the part of the screen he wants to interact with and, instead of tapping a button, he simply blinks.
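The look-then-blink interaction described above can be sketched in a few lines. This is not Matt's code and does not use ARKit; it is a minimal Python illustration that assumes some face-tracking layer already supplies a gaze point in screen coordinates and a blink amount between 0 (open) and 1 (closed). The names `Button`, `BlinkSelector`, and the `0.8` threshold are hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Button:
    """A rectangular on-screen target (hypothetical layout model)."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


def button_under_gaze(buttons: Sequence[Button],
                      gaze_x: float, gaze_y: float) -> Optional[Button]:
    """Return the first button that contains the gaze point, if any."""
    for button in buttons:
        if button.contains(gaze_x, gaze_y):
            return button
    return None


class BlinkSelector:
    """Treats the start of a blink as a 'tap' on the button being looked at."""

    def __init__(self, buttons: Sequence[Button],
                 blink_threshold: float = 0.8) -> None:
        self.buttons = list(buttons)
        # Assumed cutoff: blink amounts at or above this count as closed eyes.
        self.blink_threshold = blink_threshold
        self._eyes_were_closed = False

    def update(self, gaze_x: float, gaze_y: float,
               blink_amount: float) -> Optional[str]:
        """Feed one tracking sample; return a button name when one is selected."""
        eyes_closed = blink_amount >= self.blink_threshold
        # Fire only on the open -> closed transition, so a long blink
        # does not trigger the same button repeatedly.
        blink_started = eyes_closed and not self._eyes_were_closed
        self._eyes_were_closed = eyes_closed
        if not blink_started:
            return None
        target = button_under_gaze(self.buttons, gaze_x, gaze_y)
        return target.name if target else None
```

In use, each tracking frame would call `update` with the latest gaze point and blink amount, and the app would react whenever a button name comes back. A real implementation would likely also smooth the gaze point and remember the last stable gaze position from just before the blink, since gaze estimates tend to be unreliable while the eyes are closing.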

This technology was not created to make people lazier. Rather, it shows how Apple can improve accessibility for its users while enhancing the smartphone experience. In addition, a feature like this would let people with disabilities enjoy using Apple products.

Link

https://www.apple.com/newsroom/2018/06/apple-unveils-arkit-2/

Contributors

Hunter: @muhammadridwan

This was posted on Steemhunt, a place where you can dig up products and earn STEEM.