There are currently a lot of buzzwords in the world of technology, such as blockchain, neural networks, artificial intelligence, machine learning and, lastly, quantum computing. For this post, we will be stepping into the world of one of these amazing technological advancements: Quantum Computing. I did make a blog post here about large primes and their application in cryptography, which rests on the inability of existing computer technologies to factor the product of two such primes in any reasonable amount of time. But what if I told you that something was cooking that could potentially topple existing encryption systems and force us to find newer ways of securing our data over the internet, yet also promises a lot of advantages: providing solutions to many scientific questions, boosting medical research, transforming our financial sector and, in a nutshell, crushing tasks that conventional computer systems would take eternities to accomplish?

What are Conventional Computers?

It doesn't matter if it is your 2018 MacBook Pro or the electronic calculator the old accountant at your school uses; these are all examples of conventional computers. These are systems that obey the classical laws of physics.

So how do they work?

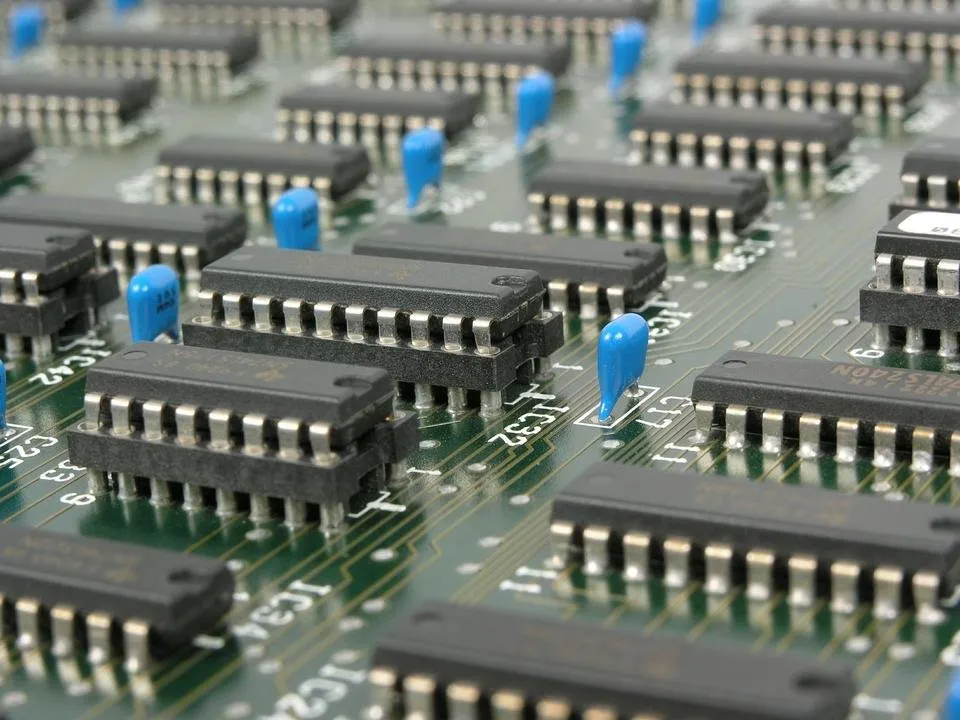

Conventional computers store and process binary digits, or bits, with the help of transistors, which act as switches. When a transistor is on it represents the digit one, and when it is off it represents the digit zero. Combinations of these binary digits (0 and 1) are used by the computer to store any information (letters, numbers, symbols etc). Transistors are connected together to form logic gates, which enable the computer to perform calculations by comparing and combining binary digits held temporarily in registers (temporary memory).
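
To make the idea of logic gates a little more concrete, here is a minimal sketch in Python of how a handful of gates can add two numbers held as bits. The function names (`and_gate`, `full_adder`, `add_registers` and so on) are my own, chosen for illustration, and real hardware is of course far more elaborate than this.

```python
# Illustrative sketch only: all names here are hypothetical, not from any library.

def and_gate(a: int, b: int) -> int:
    """Output 1 only when both input bits are 1."""
    return a & b

def or_gate(a: int, b: int) -> int:
    """Output 1 when at least one input bit is 1."""
    return a | b

def xor_gate(a: int, b: int) -> int:
    """Output 1 when the two input bits differ."""
    return a ^ b

def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three bits using only gates; returns (sum_bit, carry_out)."""
    partial = xor_gate(a, b)
    sum_bit = xor_gate(partial, carry_in)
    carry_out = or_gate(and_gate(a, b), and_gate(partial, carry_in))
    return sum_bit, carry_out

def to_bits(n: int, width: int) -> list[int]:
    """Write n as a list of bits, least significant bit first."""
    return [(n >> i) & 1 for i in range(width)]

def from_bits(bits: list[int]) -> int:
    """Turn a list of bits (least significant first) back into a number."""
    return sum(bit << i for i, bit in enumerate(bits))

def add_registers(x_bits: list[int], y_bits: list[int]) -> list[int]:
    """Add two equal-length bit lists one position at a time, carrying as we go."""
    carry = 0
    result = []
    for a, b in zip(x_bits, y_bits):
        sum_bit, carry = full_adder(a, b, carry)
        result.append(sum_bit)
    result.append(carry)  # the final carry becomes the top bit
    return result

total = add_registers(to_bits(5, 4), to_bits(6, 4))
print(total, from_bits(total))
```

Notice that the addition walks through the bits one position after another, which is the step-by-step style of working that conventional computers rely on.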

Over the years, transistors have steadily shrunk, and the smaller they get the more of them fit on a chip and the more powerful computers have become. Today billions of transistors are squeezed into chips no bigger than a tooth, known as integrated circuits, which store and process data.

As technology advances, there is a need to store and process more information, which means more ones and zeros, which in turn means billions of transistors to get the job done. The problem here is that each transistor can only hold a single value of either 1 or 0 at a particular instant, so a conventional computer has to work through the possibilities step by step. For some complex problems the number of steps grows so quickly with the size of the problem that no realistic amount of time is enough. These problems are called intractable problems. Since transistors are approaching the smallest size we can make them and intractable problems persist, this seems to be about as far as conventional computers, which are based on the classical laws of physics, can take us; hence the need to explore the world of quantum physics for solutions.

What is Quantum Theory?

Quantum physics is the aspect of modern physics that has to do with atoms and the subatomic particles contained inside them. In other words, this theory explains the behaviour and nature of matter and energy at the atomic and subatomic level. At this level, the classical or conventional laws of physics break down. Matter at such small scales does not behave in the way matter in our everyday world behaves.

If by any chance you have studied light waves, you will have come across quantum theory. Light behaves as though it is made up of particles or as a series of waves, depending on the nature of the obstacle it encounters. This goes against the classical laws of physics known to us: a train will always be a train no matter the conditions and can never also be a bus or a car. This weird phenomenon is known as wave-particle duality and is one of the concepts explained by quantum theory.

What does this have to do with Computers?

Quantum computing is simply the application of quantum-mechanical phenomena, such as superposition and entanglement, to processing data.

Have you ever heard of Moore's Law? According to this observation by Intel co-founder Gordon Moore, the number of transistors on a chip doubles roughly every two years (a figure of eighteen months is often quoted for computing performance). As this law advances, the number of problems that are out of practical reach reduces. But in recent times the pace seems to be slower than ever because, as stated earlier, transistors are now about as small as we can make them. For this law to continue to hold true, there has to be a shift in how our computer systems work, from classical physics to quantum physics.
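
To get a feel for what this kind of doubling means, here is a small back-of-the-envelope sketch. The function name `projected_count` and the starting figures are made up for illustration, and the doubling period is left as a parameter because both eighteen months and two years are commonly quoted.

```python
# Illustrative arithmetic only: the starting figures below are invented.

def projected_count(start: float, years: float, doubling_period_years: float) -> float:
    """Value after `years` if it doubles once every `doubling_period_years`."""
    return start * 2 ** (years / doubling_period_years)

# Compare the two commonly quoted doubling periods over the same decade.
for period in (1.5, 2.0):
    growth = projected_count(1, 10, period)
    print(f"doubling every {period} years -> about {growth:.0f}x in 10 years")
```

The point is less the exact numbers and more the shape of the curve: steady doubling adds up very quickly, which is why a slowdown is felt so sharply.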

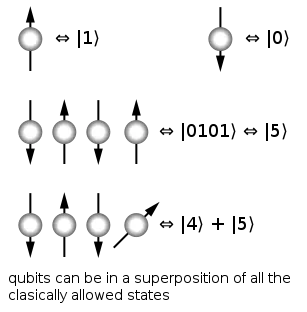

The key feature of conventional computers is the binary digit, also known as the bit, which can store either 1 or 0 at any one time. Quantum computing proposes the use of quantum bits, or qubits, which can be in the state 1, the state 0, or a superposition of both at the same instant. A superposition is described by two numbers (amplitudes) that set how likely the qubit is to read as 0 or as 1 when measured; the measurement itself always returns a single 0 or 1. Just in case you didn't understand that, look back at the concept of wave-particle duality I explained earlier, where light could act as a particle or as a wave.
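
If that still feels abstract, a qubit can be imitated on a conventional computer with a pair of numbers. The sketch below is a toy simulation, not how a real quantum machine is programmed: the function names are my own, and the Hadamard gate used here is a standard quantum operation that this post does not otherwise cover. It takes a qubit that is definitely 0 and puts it into an equal mix of 0 and 1.

```python
import math
import random

# Toy single-qubit simulation. A state is a pair (amp0, amp1) of amplitudes;
# the chance of reading 0 or 1 is the square of the matching amplitude.
# All function names are hypothetical, chosen for this illustration.

def hadamard(state: tuple[float, float]) -> tuple[float, float]:
    """Apply the Hadamard gate, which turns a definite 0 into an equal superposition."""
    amp0, amp1 = state
    scale = 1 / math.sqrt(2)
    return (scale * (amp0 + amp1), scale * (amp0 - amp1))

def probabilities(state: tuple[float, float]) -> tuple[float, float]:
    """Chance of measuring 0 and chance of measuring 1."""
    amp0, amp1 = state
    return (abs(amp0) ** 2, abs(amp1) ** 2)

def measure(state: tuple[float, float]) -> int:
    """Measuring always gives a plain 0 or 1, picked according to the probabilities."""
    chance_of_zero, _ = probabilities(state)
    return 0 if random.random() < chance_of_zero else 1

zero = (1.0, 0.0)          # a qubit that is definitely 0
mixed = hadamard(zero)     # now in a superposition of 0 and 1

readings = [measure(mixed) for _ in range(1000)]
print("chances of 0 and 1:", probabilities(mixed))
print("ones seen in 1000 measurements:", sum(readings))
```

Each individual measurement gives an ordinary bit; the superposition only shows up in the statistics over many runs.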

Why does this matter?

If a register of qubits can be in a superposition of many numbers at one instant, a quantum computer can act on all of those values in a single operation. In that sense information is processed in parallel rather than in series, one process at a time, although a measurement at the end returns only one result, so quantum algorithms have to be designed so that the right answer is the likely one. If practical, quantum computers could be exponentially faster than conventional computers for certain problems, unlocking solutions to a lot of intractable problems in science, mathematics, finance, medicine and computing.
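
One way to see where the potential speed-up comes from is to count how many numbers it takes to describe a group of qubits on a conventional computer. The sketch below is a rough illustration with names of my own choosing: a register of n qubits needs 2 to the power n amplitudes, one for every possible combination of ones and zeros.

```python
import math

# Illustrative sketch: function names are hypothetical.

def uniform_superposition(n_qubits: int) -> list[float]:
    """Amplitudes for n qubits holding every n-bit value with equal weight."""
    size = 2 ** n_qubits
    amplitude = 1 / math.sqrt(size)
    return [amplitude] * size

def measurement_probabilities(state: list[float]) -> list[float]:
    """Chance of reading each possible n-bit value when the register is measured."""
    return [abs(amplitude) ** 2 for amplitude in state]

state = uniform_superposition(3)
print("amplitudes needed for 3 qubits:", len(state))

# The description doubles in size with every qubit added.
for n in (1, 2, 3, 10, 30):
    print(f"{n} qubits -> {2 ** n} amplitudes")
```

This doubling is also why conventional computers struggle to simulate even modest numbers of qubits.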

The quantum-mechanical phenomenon known as superposition is used to enable data computation.

Enough of theory, is Quantum Computing Practical?

Based on my own research, a quantum computer that's capable of replacing conventional computers is still years away, but no hope is lost. There has been a lot of progress over the years that takes us closer to achieving quantum computing.

The year 2000 saw some of the earliest multi-qubit machines. Isaac Chuang led the demonstration of a 5-qubit computing machine and, in the same year, a group of researchers went two qubits further with a 7-qubit computer. It was in 2005 that the first quantum computer capable of manipulating a qubyte (8 qubits) was developed by researchers at the University of Innsbruck. These were small but crucial steps. Over the years, more research has been carried out and larger numbers of qubits have been reached.

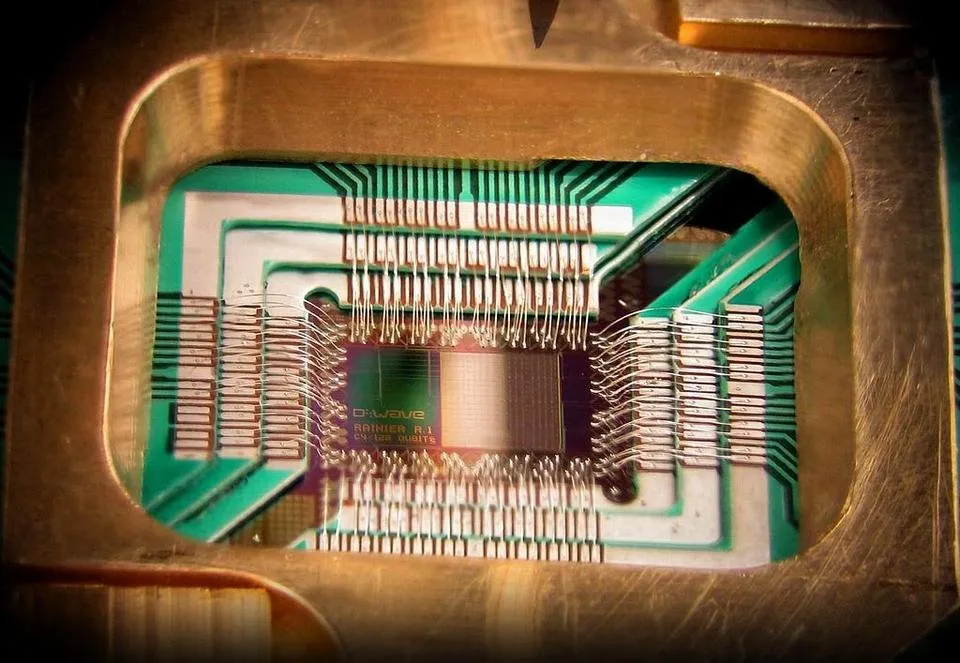

In 2011, a Canadian company known as D-Wave Systems told the world that it had produced a 128-qubit quantum computer. This announcement drew a lot of criticism over whether D-Wave's computer really demonstrated quantum behaviour.

Despite this criticism, Google and NASA went ahead to purchase these machines for around 10 million dollars for the sole purpose of developing their own computers based on D-Wave's systems. It is believed that these computers do not really demonstrate quantum behaviour at the moment but tend to lean more towards conventional computing. It has also been found that while existing quantum systems might sometimes be ten times faster than conventional systems, they are at other times a hundred times slower, and they are very unstable due to the unstable nature of the qubit. Apart from Google and NASA, big players like IBM and Microsoft are actively involved in research geared towards achieving quantum systems.

Photo of a 128-qubit chip constructed by D-Wave Systems Inc.

In Conclusion

The future is bright for quantum computing, but as of yet most of what we have is theoretical. A practical machine might arrive today, tomorrow or maybe never, and quantum computing may turn out not to be the solution to our intractable problems, but either way I believe Moore's Law will be obeyed. It is worthy of note that Microsoft's co-founder, Bill Gates, is intrigued by quantum computing and believes that it is the future.
