The new questionnaire for the Translation category went live today. Although everyone from Utopian and DaVinci has already spent a lot of time making the sheet as helpful and thorough as possible, there is always room for improvement!

The new questionnaire sparked some questions and a nice discussion on Discord with @dimitrisp and @aboutcoolscience, which resulted in this post. It discusses some parts of the questionnaire and the future help document that will accompany it.

The questionnaire

Some of the early changes to the questionnaire included merging the questions about major and minor mistakes into one (now question 2), rewording question 3, and merging two questions into what is now question 4. To sum them up:

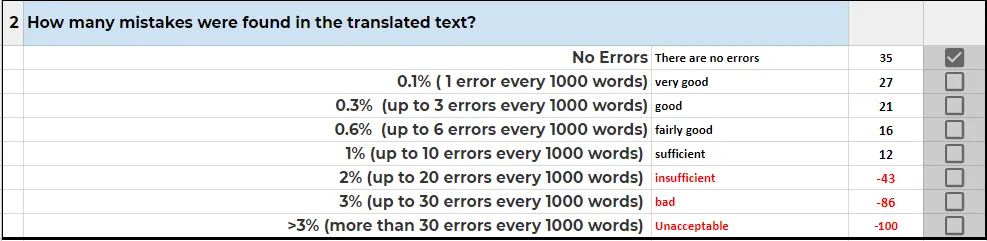

2- How many mistakes were found in the translated text?

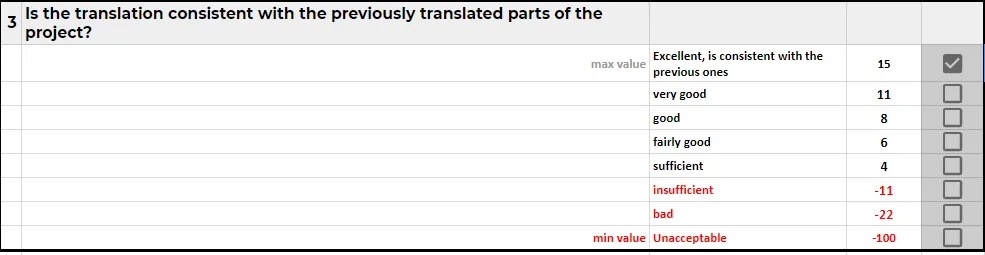

3- Is the translation consistent with the previously translated parts of the project?

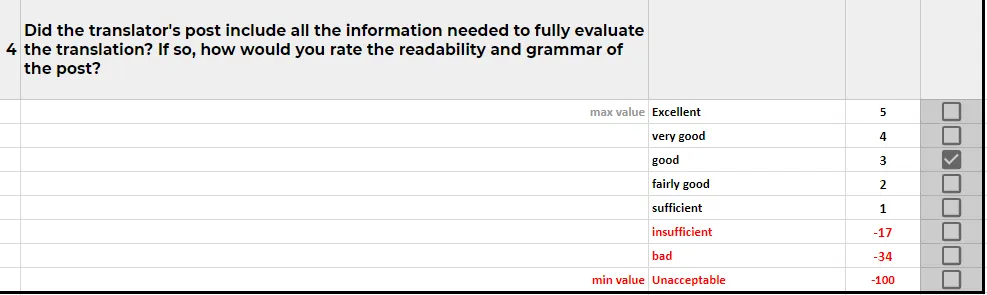

4- Did the translator's post include all the information needed to fully evaluate the translation? If so, how would you rate the readability and grammar of the post?

2- How many mistakes were found in the translated text?

Merging the questions about minor and major mistakes is logical, but it still raises a problem: what counts as a mistake? Because of the way the score is calculated, there is quite a big difference between making 1, 3 or 6 mistakes. This raises the following questions:

- Is a typo a mistake?

- Is a repeated mistake counted once or every time?

- Is a possibly better word choice a reason to count a mistake?

Personally, I would answer them as follows:

- Is a typo a mistake?

No, but repeated typos can together be counted as one mistake (a minor one, perhaps?).

- Is a repeated mistake counted once or every time?

Once, as long as it isn't a mistake that has to be corrected too often, and only if the language moderator hasn't already pointed it out to the translator (so not in a later translation).

- Is a possibly better word choice a reason to count a mistake?

No, better word choices are often a matter of subjectivity.

This would be something to put in the help document, because there are many different types of mistakes, and they need to be handled consistently across all languages and projects.

3- Is the translation consistent with the previously translated parts of the project?

As mentioned earlier, the first thing that changed here was the wording. This question seems very appropriate in some cases, for instance:

- multiple translators working on the same project

- a very large project

- a change of translators happening after a while

- a translator taking a long break from working on a project.

Outside these instances, the translator's style should be consistent as a matter of course.

4- Did the translator's post include all the information needed to fully evaluate the translation? If so, how would you rate the readability and grammar of the post?

This question follows an if/else format: the first part of the question is required, or else the score will be set to unacceptable. If the translator did not include all the information needed to fully evaluate the translation, the translation cannot be evaluated. It's as easy as that.

As can be seen from the scores for this question, considerably less weight is given to the post than to the translation itself. This was requested specifically by the language moderators because, in all honesty, the post mainly exists because it is required; it isn't meant to entertain readers. Therefore, although a lot could be said about a post, we only look at readability and grammar, because they show at a glance whether or not a translator is capable of the job they're actually doing.

The help document

What stands out most to me is the need for examples. Most of the questions follow a grading system from excellent to unacceptable. Though of course slightly subjective, it's easy enough to see when a post is excellent (no mistakes, engaging, consistent, etc.) or unacceptable (a lot of mistakes, inconsistent, etc.).

The problem arises when you have to decide whether the accuracy of the translation is very good, good, fairly good or sufficient. These are all still "passing grades", with scores of 19, 15, 12 and 9 respectively. Comparing the extremes with each other (very good versus sufficient) might provide some insight, but you can't keep relying on the extremes. So there is a need for an exemplary post that shows when strings are excellent and when they are, well, any of the other options.

This raises another issue, as discussed with @dimitrisp: the answer is different for every language, since different languages follow different rules. There really isn't a one-size-fits-all rulebook for these questions, so it might become the task of the language moderators to make their own examples. This would help their translators, but still leave room for bias or subjectivity. The solution? I'm not sure!

There is something to be said for a standard sentence that gets translated and, where needed, adapted for each language so that the general idea stays intact. Would that solve it? I don't know.