logo by @camiloferrua

Repository

https://github.com/to-the-sun/amanuensis

The Amanuensis is an automated songwriting and recording system aimed at ridding the process of anything left-brained, so one need never leave a creative, spontaneous and improvisational state of mind, from the inception of the song until its final master. The program will construct a cohesive song structure, using the best of what you give it, looping around you and growing in real-time as you play. All you have to do is jam and fully written songs will flow out behind you wherever you go.

If you're interested in trying it out, please get a hold of me! Playtesters wanted!

New Features

- What feature(s) did you add?

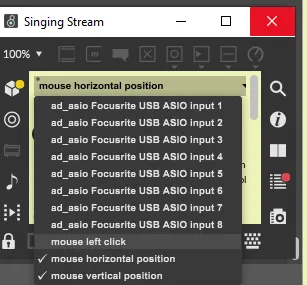

The Singing Stream is a vital aspect of The Amanuensis, allowing you to turn any stream of data into MIDI notes. One obvious stream has been missing until now, however: data from the computer's mouse. The horizontal position, the vertical position and mouse clicks can now all be used as streams, allowing you to use your mouse as your instrument.

Combined with an eye tracker, this allows you to play notes based solely on where your eyes are looking on the screen, leaving your hands free to play any other instruments at the same time.
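To give a rough idea of what turning a mouse position into a MIDI note involves, here is a minimal Python sketch. It is not how The Amanuensis does it (the real logic lives in Max patchers); the function name, the default note range and the linear scaling are all assumptions made purely for illustration.

```python
def position_to_midi_note(position, extent, low_note=48, high_note=72):
    """Map an integer pixel coordinate in [0, extent) to a MIDI note number.

    Hypothetical helper: the note range and the linear mapping are
    assumptions, not the mapping used inside The Amanuensis.
    """
    if extent <= 0:
        raise ValueError("extent must be positive")
    # Clamp so that coordinates outside the screen still yield a valid note.
    clamped = min(max(position, 0), extent - 1)
    # Number of distinct notes in the inclusive range.
    span = high_note - low_note + 1
    # Divide the axis into `span` equal bands, one per note.
    return low_note + (clamped * span) // extent


# Horizontal position on a 1920-pixel-wide screen.
left_edge = position_to_midi_note(0, 1920)
middle = position_to_midi_note(960, 1920)
right_edge = position_to_midi_note(1919, 1920)
```

The same function could be applied to the vertical position by passing the screen height as `extent`.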

- How did you implement it/them?

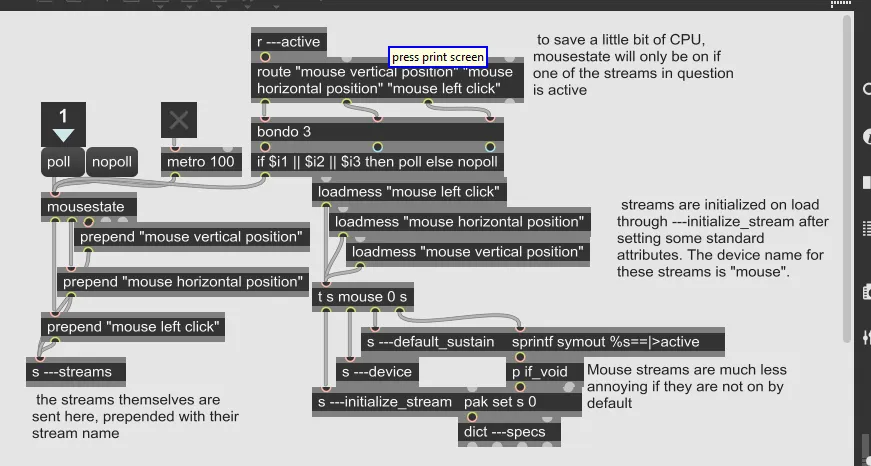

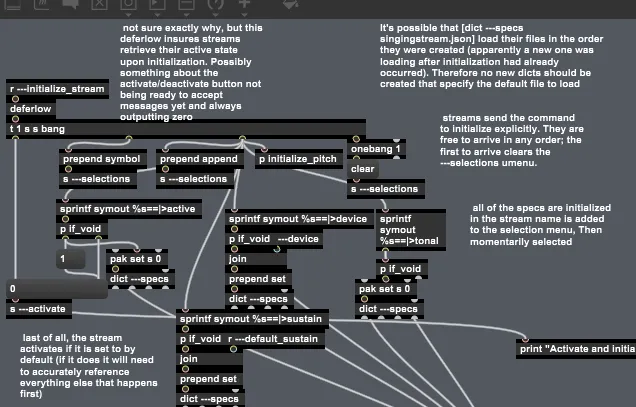

If you're not familiar, Max is a visual language, and textual representations like those shown for each commit on GitHub aren't particularly comprehensible to humans. You won't find any of the comments there either. I will therefore present the completed work as images instead. Read the comments in them to get an idea of how the code works; my description here is about the process of writing that code.

These are the primary commits involved:

A new subpatcher was created to handle the mouse functionality. It operates in much the same way as the hid subpatcher (human interface devices, specifically game controllers and the like), except that every computer can be assumed to have a working mouse, so its streams can be initialized by default rather than waiting for each new control to come in. This simplifies things a bit, for example by removing the need for dynamic scripting.

the new mouse subpatcher, complete with commenting

In addition, the initialization process has been reworked to be less convoluted and more streamlined. Previously the audio driver streams were arbitrarily chosen as the first to initialize, and only after that were the other streams allowed to go ahead. The only real purpose of this ordering was to ensure that the UI menu listing all the sources was cleared at the appropriate time and not cleared again later on.

As more and more stream sources were added, this led to a cumbersome chain of events, so I decided to rework the system so that all sources are free to "race" towards initialization, and whichever gets there first is the one that clears the menu.
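The "first one there clears the menu" idea can be sketched in a few lines of Python. This is only an analogy for what the Max patch does; the `SourceMenu` class and the source names are hypothetical.

```python
class SourceMenu:
    """Toy stand-in for the UI menu that lists stream sources (hypothetical)."""

    def __init__(self):
        # Pretend the menu still holds entries from a previous session.
        self.items = ["stale entry"]
        self._cleared = False
        self.clear_count = 0

    def register(self, source_name):
        # Whichever source arrives first clears the menu exactly once;
        # later arrivals only append themselves.
        if not self._cleared:
            self.items.clear()
            self._cleared = True
            self.clear_count += 1
        self.items.append(source_name)


menu = SourceMenu()
# The arrival order is arbitrary; no source has to wait for another.
for source in ("mouse", "audio driver", "hid"):
    menu.register(source)
```

Because the clear is guarded by a flag rather than tied to one particular source, adding a new source does not lengthen any chain of dependencies.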

In the process, ---initialize_stream was introduced, which each stream now uses to initialize itself explicitly. Previously, the streams themselves were continually monitored for new arrivals, and each new stream was initialized when it was first seen. This was inefficient and even started failing in inexplicable ways once the mouse streams were added, which is the main reason I decided to bypass the whole issue with this renovation.

the reworked initialize subpatcher, complete with commenting. Each of the other stream source subpatchers has also been reworked (in a manner resembling p mouse in the first screenshot above) to utilize ---initialize_stream, which is received here
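For readers who don't use Max, the switch from monitoring streams to having each one announce itself can be approximated in Python as below. I'm assuming here that ---initialize_stream behaves like a named channel that sources send to and the initialize subpatcher receives from; the `MessageBus` class, the callback and the stream names are all hypothetical.

```python
from collections import defaultdict


class MessageBus:
    """Minimal named-channel bus, loosely analogous to a send/receive pair."""

    def __init__(self):
        self._receivers = defaultdict(list)

    def receive(self, name, callback):
        # Register a callback to run whenever something is sent to `name`.
        self._receivers[name].append(callback)

    def send(self, name, payload):
        # Deliver the payload to every receiver listening on `name`.
        for callback in self._receivers[name]:
            callback(payload)


initialized = []


def on_initialize_stream(stream_name):
    # Initialize each stream once, at the moment it announces itself.
    if stream_name not in initialized:
        initialized.append(stream_name)


bus = MessageBus()
bus.receive("initialize_stream", on_initialize_stream)

# Each stream announces itself explicitly; nothing polls for new streams.
for stream in ("mouse x", "mouse y", "mouse click"):
    bus.send("initialize_stream", stream)
```

The receiver does work only when a stream announces itself, so there is no need to keep watching all the data for newcomers.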