AI costs are dropping like a rock. This is why the expansion is taking place.

When we look at where things stood a couple of years ago, we see a radical change. Cost declines like this are what drive exponential growth. Fortunately for the AI world, this does not appear to be slowing down.

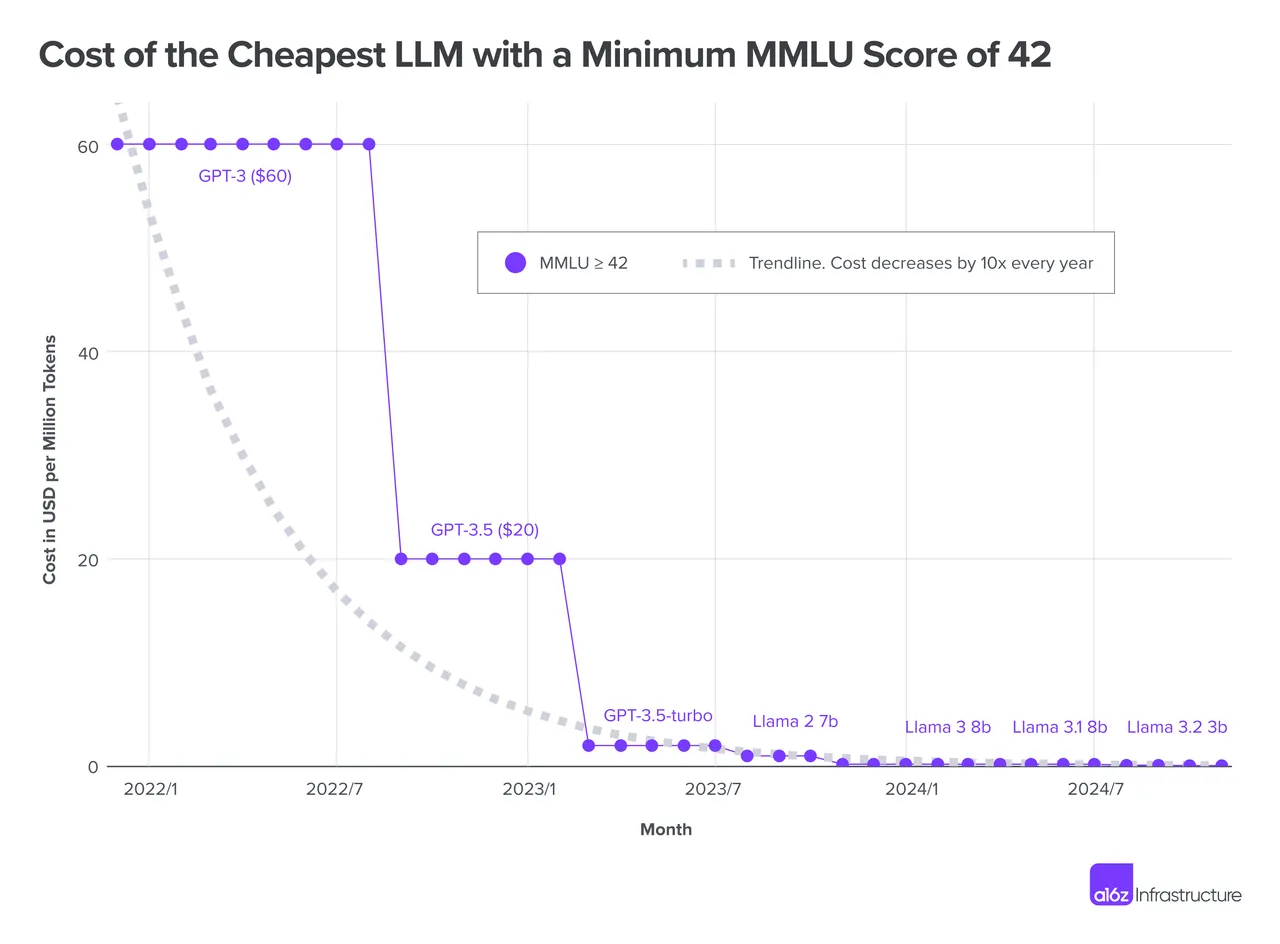

The chart above shows the cost of using an LLM over the past couple of years. You can see it went from $60 per million tokens when GPT-3 came out to a few cents today.
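To put a number on that drop, here is a quick sketch of the arithmetic. The $60 figure comes from the chart. The $0.06 figure is only an assumed stand-in for "a few cents", and the function name is made up for illustration.

```python
def fold_reduction(old_price: float, new_price: float) -> float:
    """Return how many times cheaper new_price is than old_price."""
    return old_price / new_price


OLD_PRICE_PER_M = 60.00  # USD per million tokens, from the chart
NEW_PRICE_PER_M = 0.06   # USD per million tokens; ASSUMED value for "a few cents"

drop = fold_reduction(OLD_PRICE_PER_M, NEW_PRICE_PER_M)
print(f"About {drop:,.0f}x cheaper per million tokens")
```

Under that assumption the price fell by about three orders of magnitude. Swap in whatever current price you are actually paying to get your own figure.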

We have to consider the magnitude of this shift. We are looking at an unfathomable drop in cost. Not only are we seeing this on the model side, but inference is following a similar trend.

The Cost of AI Plummeting

It is often hard to understand how things are changing until they are quantified. There is a reason LLM development is limited to large corporations: the expense is enormous. While costs have dropped, the number of tokens required to train a model keeps growing.

We are likely to see a point in time where models are trained on more than 100 trillion tokens. At $60 per million tokens, this would be impossible for even the largest corporations.

But now, with the costs continuing to drop, we can see how it is at least feasible.
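A rough back-of-the-envelope version of that comparison is below. It takes the per-million-token price at face value as a proxy for the cost of pushing tokens through a model, which is a simplification, since real training budgets depend on hardware, model size and much more. The $0.06 price is again an assumed stand-in for "a few cents", and the function name is hypothetical.

```python
def token_cost_usd(tokens: int, price_per_million: float) -> float:
    """Cost in USD of processing `tokens` at a flat per-million-token price."""
    return tokens / 1_000_000 * price_per_million


TRAINING_TOKENS = 100_000_000_000_000  # 100 trillion tokens, from the text

old_cost = token_cost_usd(TRAINING_TOKENS, 60.00)  # price from the chart
new_cost = token_cost_usd(TRAINING_TOKENS, 0.06)   # ASSUMED "few cents" price

print(f"At $60.00 per million tokens: ${old_cost:,.0f}")
print(f"At $0.06 per million tokens:  ${new_cost:,.0f}")
```

At the old price the bill works out to roughly $6 billion. At the assumed new price it is around $6 million, which is the gap that makes this feasible.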

Then we have the secondary benefits that come from more advanced algorithms and structural design. All of this is starting to bring the ideas of "reasoning" and "thinking" into play.

As these costs keep falling, we can see how smaller players can enter. The costs associated with training anything are dropping.

That means, in 2025, the cost to train GPT-3 is a fraction of what OpenAI spent. Naturally, this is outdated technology in the sense that other models have surpassed it. However, for specialized use cases, this is a gold mine.

Smaller Models

We are seeing these lower costs put to use in smaller models. Specialization is something that we will see more of over the next couple of years.

For example, a legal model in the United States could be built for real estate law. The training would include all the data relating to real estate contracts. All this data would be fed in to train a specific application. An underlying LLM would be incorporated for general tasks.

This is something that will end up being utilized by real estate personnel. It is where specialization enables the model to be more "knowledgeable" while having better alignment (fewer hallucinations) compared to larger ones.

We are going to see this process repeat itself. The larger models will continue to push the "AI race" whereas the smaller ones will be revenue generators. My guess is monetization on the larger ones comes from integration into real-world items, i.e., conversation pieces.

This is going to make the next couple of years even more impressive than what we saw in 2024. It is only accelerating.